Representative democracy and generative AI

One of the characteristics of this wave of AI is that it is “generative”, the “G” in “GPT”. In the past, when people used AI to predict the chemical properties of molecules or to play chess, the point was to have the AI complete a clear-cut task and return a simple result. Now, when you use ChatGPT to write articles and code, or use Midjourney to draw, you are having the AI “generate” content for you and return something rich and elaborate.

For example, here’s a picture I drew with Midjourney–

The picture shows a huge UFO hovering in the sky near a large pyramid. The UFO is lit up and seems to have many windows; it is clearly not a product of Earth’s civilization. The ground is a desolate desert landscape. Many people are looking up, in groups of three or five, standing or sitting, perhaps dozens or hundreds of them.

Before I submitted this column, I was the only person who had seen this painting. So how much would you say I contributed to its creation?

Actually, my main contribution was the line, “UFOs flying in ancient Egypt. People look up.” Plus a couple of parameters.

This is called a “prompt”, and I want you to learn the word. It works as both a verb and a noun, meaning a request made to the AI; it could also be translated as a “chant” or an “incantation”.

The images are all AI generated.

But I have other contributions, perhaps more important ones.

The AI originally generated the following four images, all in the style of ancient Egyptian frescoes–

I didn’t think these were good enough, so I had it redraw them. Of course, all I had to do was click a button, but it was a contribution after all.

Then it generated these four below–

This time I thought one of them was good, so I had it draw a few variations of that one–

Then I selected one of them and had it refined into the one we started with.

This process can be repeated many times, and the AI doesn’t mind redoing the work until you pick one you’re happy with. You can make more detailed requests, specify more elements in the picture, specify the painting style, and so on. But in the end it is the AI that does the painting; you just make a request (the prompt) and then a selection.
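
The loop described here can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `generate_candidates` is a hypothetical stand-in for an image model (it is not Midjourney’s actual interface), and the “images” are just labeled strings. The human side of the loop appears only as the `choose` function.

```python
from typing import Callable, List, Optional


def generate_candidates(prompt: str, round_no: int, n: int = 4) -> List[str]:
    """Hypothetical stand-in for an image model: returns n labeled 'images'."""
    return [f"{prompt} [round {round_no}, image {i}]" for i in range(1, n + 1)]


def prompt_generate_choose(
    prompt: str,
    choose: Callable[[List[str]], Optional[str]],
    max_rounds: int = 5,
) -> Optional[str]:
    """Ask once, let the generator work, then pick; regenerate if nothing is good enough."""
    for round_no in range(1, max_rounds + 1):
        candidates = generate_candidates(prompt, round_no)  # the generator does the work
        picked = choose(candidates)                         # the human only selects
        if picked is not None:
            return picked
    return None  # nothing acceptable within max_rounds


def picky_chooser(candidates: List[str]) -> Optional[str]:
    """Illustrative taste: reject the whole first batch, accept the third image of round 2."""
    for candidate in candidates:
        if "round 2, image 3" in candidate:
            return candidate
    return None


result = prompt_generate_choose(
    "UFOs flying in ancient Egypt. People look up.", picky_chooser
)
print(result)
# UFOs flying in ancient Egypt. People look up. [round 2, image 3]
```

Note that the person’s code never touches how a candidate is made; their whole contribution is the prompt string and the `choose` function.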

Generating content with GPT is in the same spirit. It’s very different from traditional software applications. When you draw with traditional software, every detail of the picture is designed by you, and the computer is purely a tool. AI, on the other hand, now takes on almost all of the creative work. That’s why we have a feeling that times have changed and AI is coming to life.

Of course, the fact that you prompt and make choices is also a form of creation, because the process reflects your insights and tastes - a professional sci-fi artist would certainly do a better job than me. We could probably also say “prompting is creating” and “choosing is creating”.

But the point is that the process of creation is not entirely your own: you make the initial request, you make the final choice, but you don’t complete the process. You’re in control, but you’re not – and don’t need to be, and shouldn’t be – in complete control. But you do have a sense of control.

This ‘prompt-generate-choice’ relationship we have with AI is not new.

✵

Ask for it beforehand, decide whether you’re happy with it afterward, and try to interfere as little as possible in between. Isn’t that what bosses do with their employees? Isn’t it the same between Party A (the client) and Party B (the contractor)? In each case the latter does the generating, but the two parties co-create the end result.

The virtue of the boss and Party A in this relationship is to respect the professional skills of the generator.

It may not be easy to get the AI to paint exceptionally well, but what I find most interesting is that it’s even harder to get the AI to “paint poorly”: you’d be hard pressed to get Midjourney to generate a badly done painting. It’s trained on the work of countless professional painters and masters, so its output is at least professional-caliber from the very first attempt. You can certainly design the big picture of the painting, but no matter how good or bad your big-picture design is, the AI will always make sure that the details of the painting are at a professional level.

For example, look at the following painting of a house in the woods. The prompt is “architectural illustration with retro visuals ……”, but what exactly are architectural illustration and retro visuals? If you picked an artist at random, I’m afraid they would not easily produce something at the level of the following–

There is no need to mention the brushwork. The layout and the perspective alone are far better than anything I could have imagined myself. This is professional.

This kind of generation is a bit like renovating a home, where the most rational thing for you to do is to name a general style and let the designer work out the specifics for you. You shouldn’t interfere too much with the design, because you simply don’t understand it.

When we talked about the ChatGPT Mantra of the Mind, we said that you must give specific context when making requests; the point here is that the requests should not be too specific either. If you don’t know much about the profession, then keeping a certain amount of ambiguity and letting the AI improvise on its own can often lead to better results.

✵

Actually, our Chinese policy language loves vagueness. For example, a leader asks people to “increase efforts” on something - but how much more effort, exactly? Does it mean doubling the staffing or increasing funding by 50%?

From a cynical point of view, the vagueness may be a way of putting things euphemistically so that no one loses face. For example, when you propose an idea, if the leader says, “You have a good idea,” that may signal approval; if the leader says, “You have an idea, that’s great,” an objection is taking shape. Or suppose you are about to start a project: if the leader says, “Go ahead and do it!”, that may just be politeness; but if the leader then adds, “The General Manager’s Office is your strong backing!”, that is real support.

From a conspiracy theory point of view, the ambiguity may be to leave no room for blame in case things don’t work out.

But from the point of view of “Prompt Engineering”, *vagueness creates generative space.*

Mr. Liu Han makes an interesting point in his book Think Big. Every law contains some ambiguous words. For example, Article 51(1) of China’s Company Law stipulates that, as a general rule, a company shall set up a supervisory board, but “a limited liability company with a smaller number of shareholders or a smaller scale may set up one or two supervisors, and shall not set up a supervisory board”. What counts as a “smaller number” or a “smaller scale”? Why not give a specific figure?

Liu Han said that removing the ambiguity would make the legal rules unusually rigid and unable to cope with the ever-changing reality of life. In developed regions, a company with registered capital of less than 5 million yuan is considered small, while in less developed regions the same company is considered large, so forcing a specific number would make the law unworkable. That’s why Liu Han says, **“Laws are often deliberately vague not to compromise, but to work.”**

Only with vagueness can things get done. Everyday management is the same: when a leader assigns a task to a subordinate, it is best not to “micromanage” by dictating every detail [1], and best to share your reaction once the task is done [2]. Assigning the task is the prompt; sharing your reaction is making the choice.

There’s a subtle thing here. On the surface, vague prompts may cost you a certain amount of control. But in reality, vagueness is necessary and you don’t really lose control.

✵

With these ideas in mind, we can understand why some people worry about AI replacing people, just as there have been many bosses since the beginning of time who worried about being replaced by their subordinates. But we can also see that such a worry has no universal significance, just as the vast majority of bosses are not replaced by their subordinates.

Similarly, long before anyone worried about whether AGI would enslave humans, countless wise men worried about whether the government would enslave the people. If you think about it, the vast majority of people understand neither how government works nor the various economic policies, and don’t care about politics at all. Much of what the government does is highly specialized; if it does not want you to know about something, even an expert will not find out, and even if you do find out and speak up, not many people care. Of course, modern countries have media oversight, freedom of speech and democratic elections, but no matter what, they are still representative democracies, and it is the government that actually operates policy.

The relationship between the people and the government is very much like the relationship between humans and AI: public opinion is the prompt, government operation is the generation, and the election is the choice.

Then how can you know if the government is working for the people? Actually there is still no need to worry too much.

One of the key insights of political scientists is that the people are indeed relatively insensitive to government policies themselves - but sensitive to policy change [3]. If everyone were in favor of a policy, it would have been introduced a long time ago; if everyone were against it, it would never be introduced. This means that any new policy tends to arrive at a moment when the vast majority of people do not care at all, and those explicitly in favor happen to clearly outnumber those explicitly against. These explicit supporters and opponents may make up only 1% of the effective electorate, but they make a big difference.

These people are more knowledgeable; they are the equivalent of the experts and enthusiasts who follow AGI. Because no one else cares, they effectively represent public opinion. Politicians listen to them carefully - and cannot afford not to, because there are still elections.

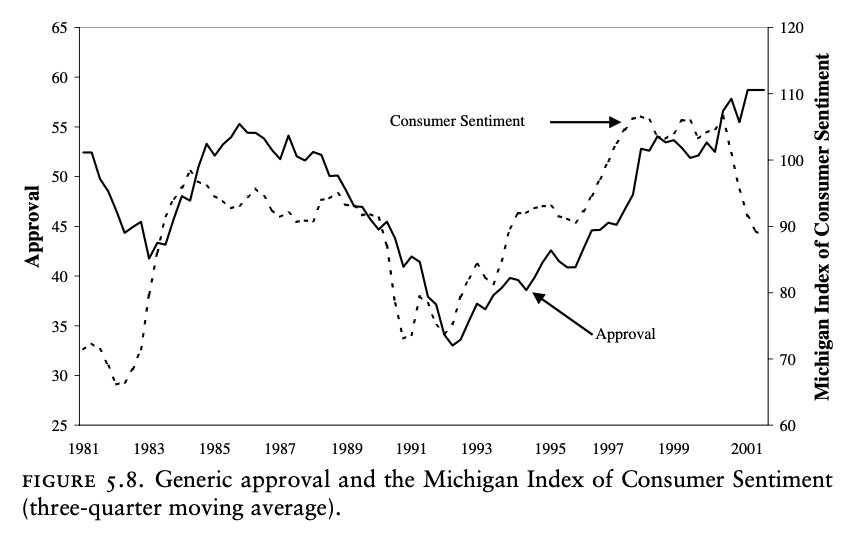

As it turns out, the people still know whether the government is doing a good job or not. Studies have shown that people’s support for the government is not so much affected by little things like the president’s philandering, but mostly by the economy. Here’s a chart that shows how the Consumer Sentiment Index and people’s approval of the government have changed over the course of U.S. history–

Consumer sentiment is the first thing to change when economic conditions get better or worse. Whether things have become more expensive or cheaper, whether you have enough money to spend: you know these things in your gut. But this change in sentiment is not immediately reflected in government approval ratings. Changes in approval ratings lag a quarter or two behind changes in consumer sentiment - but that’s okay, approval ratings will change eventually.

In other words, if a government doesn’t get the economy going, the people will give it some time, but not much. Once the approval rating turns and an election comes around, the current government will have to go.

Political scientist Christopher Wlezien has a “thermostat” theory that is particularly illustrative [4]. He says that public opinion is like a thermostat: yes, the people don’t understand how the economy works, in the same way that most of us don’t know how an air conditioner works, and we don’t even know exactly what the optimal room temperature should be - but we do know whether it feels too cold or too hot right now, and we adjust the temperature accordingly. It’s enough to turn on the heating if it feels too cold, or the cooling if it feels too hot.
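
The thermostat idea can be illustrated with a toy simulation. This is a minimal sketch, not Wlezien’s actual statistical model: the function names, the tolerance, the step size, and the numbers are all assumptions made up for illustration. The public never states its ideal policy level; it only signals “more” or “less”, and policy moves one fixed step in that direction.

```python
from typing import List


def public_signal(preferred: float, current: float, tolerance: float = 0.5) -> int:
    """Return +1 ('more'), -1 ('less'), or 0 ('about right').

    The public only feels whether policy is too cold or too hot;
    it never reports the preferred level itself.
    """
    gap = preferred - current
    if gap > tolerance:
        return 1
    if gap < -tolerance:
        return -1
    return 0


def run_thermostat(
    preferred: float, start: float, step: float = 1.0, rounds: int = 20
) -> List[float]:
    """Move policy one step per round in whichever direction the public signals."""
    level = start
    history = [level]
    for _ in range(rounds):
        signal = public_signal(preferred, level)
        if signal == 0:  # the public is content, so policy stops moving
            break
        level += signal * step
        history.append(level)
    return history


history = run_thermostat(preferred=7.0, start=2.0)
print(history)
# [2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
```

Even though the policymaker in this sketch only ever sees a direction, the policy level ends up within the tolerance of what the public wanted.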

So, to borrow the Lincoln quote, no government can fool all the people for an extended period of time.

✵

Ancient China had no democracy, but at various times in its history it had a system of chancellor accountability, in which the emperor only delegated authority and held officials accountable, and did no specific administrative work himself. For example, during the Rule of Wen and Jing in the Western Han Dynasty and the reign of Emperor Renzong in the Northern Song Dynasty, the emperor conveyed public opinion as the prompt, the officials were responsible for the generating, and the emperor then chose based on the results; the country actually ran very well. Historically, Britain and Japan also practiced figurehead monarchy.

In modern companies, the general meeting of shareholders and the chairman of the board do not involve themselves in the day-to-day affairs of the company, which is also a “prompt-generate-choice” relationship.

*Let professionals do the professional work, while the real boss prompts and makes choices: this is not only a new trend in the AI era, but also an ideal way of doing things.*

Notes

[1] Principle 4: Management as Engineering

[2] The Nine Lies of Work 4: “Don’t Judge” Feedback and “Impress” Leadership

[3] James A. Stimson, Tides of Consent: How Public Opinion Shapes American Politics, 2004.

[4] Wlezien, C. (1995). The Public as Thermostat: Dynamics of Preferences for Spending. American Journal of Political Science, 39(4), 981-1000. https://doi.org/10.2307/2111666

Highlight

Letting professionals do the professional work while the real boss prompts and makes choices is not only the new ethos of the AI era, but also an ideal way of doing things.