Q&A: will we fall behind in AI adoption?

From Day Lesson: Business Strategy Explained

Reader 身斗小民: The ChatGPT firestorm has made many people reflect on why disruptive innovations always happen in the US. One of the ideas we have been taught since childhood is that if we fall behind, we will be beaten. Unfortunately, this time we have been slow again. The past is not forgotten. On the road of science and technology innovation, how can we keep this from happening again?

Reply from Wan Weigang -

If you imagine a country as a person, you will try to guess what that person did right or wrong to bring about such a result. But a country is not a person, and a lot of things happen not by will but by evolution.

There are many differences between Chinese and American innovation. Some of them are institutional. For example, capital-intensive startups like OpenAI need extremely large amounts of venture capital, so there must be institutional safeguards: a company that someone has worked hard to build should not be banned at a stroke, leaving people unable to cash out ……

But for this particular GPT breakthrough, I don’t think the main factor is institutional. It’s probably more about culture and stage of development. Let’s single out one small aspect.

One of the most mind-blowing abilities of GPT is that it writes programs for you based on your description. Especially since GPT-4 came out, it can be said that everyone can program now, and in the future programming may be done mainly in natural language. So why is GPT so good at programming? Because OpenAI obtained a lot of high-quality training material for it from the GitHub website. Microsoft first acquired GitHub and then gave GitHub’s code data to OpenAI.

GitHub is a community of programmers, jokingly nicknamed “the world’s largest homosexual dating platform”. Programmers share code on it, answer each other’s questions, watch masters build projects, and exchange programming skills.

Note that programmers don’t earn any income from doing these things on GitHub; the sharing is entirely free. The programmers aren’t thinking “I’m going to contribute to a future where the US has the best AI in the world”. They’re just doing it out of interest.

GitHub is not a special case. Before it there was GNU, and there were free software communities like Linux, where people shared selflessly. These people do emphasize “copyright”, but not copyright that ensures they make money. They mean copyright that ensures the software stays free: you can use my code, but you have to inherit my license terms, so that the code stays free.

This culture of freedom didn’t fall from the sky into Silicon Valley; it came from an earlier hippie culture. Hippie culture, in turn, has its roots in the social movements that took off in the United States in the 1960s ……

Simply put, these people wrote programs neither just to make a living nor to build up America, but, like painting and music, as an art, a spiritual pursuit. As early as the 1970s, many programmers already believed in the spiritual credo that “computers are alive”.

In fact, there are a lot of Chinese programmers on GitHub too, but most of them are probably there to find a piece of code to use, not to share and exchange ideas.

Our culture of pragmatism is understandable. If you work 996 all day, your mother-in-law worries all day that you can’t pay the mortgage, your wife orders you to hurry home after work and spend time with the children once you get there, your boss watches you all day demanding this and that, and when you take on a research project nearly half of your time goes to writing reports and filing travel reimbursements …… where would you find the heart to think about the art of programming?

Some people work in technology mainly to enjoy the charm of technology itself; others work in technology to make a living. Which group would you say is more likely to make unexpected, breakthrough discoveries? Great technological breakthroughs are the product of spare time and spare money. With China’s housing prices so high and life so tiring, how much spare time and spare money can we have?

This is not purely a matter of monetary incentives, nor a matter of willingness to give. It is a matter of the stage of social development.

Reader Zhou Yi: ChatGPT can’t be used directly in China. If no equally powerful domestic language model fills the gap for a long time, will China’s “first year of AI” be postponed? And given how fast AI evolves, will China fall behind across the board and be marginalized by the network that forms around AI? Will it be totally suppressed in future AI competition?

Reply from Wan Weigang -

OpenAI opened ChatGPT to Indian users just a few days ago, but it is still not open to Chinese users. Enterprise users call GPT through the API, and OpenAI itself places no restrictions on this; OpenAI can even set up a dedicated server for your company. But for well-known reasons, Chinese companies can’t legally use OpenAI’s API at the moment.

This is only good for Baidu and bad for China.

Baidu just released its own large language model, called Wenxin Yiyan. It has no programming capability, no API, and far weaker conversational ability than GPT-3. If we have to wait for Baidu to get good at AI before we can use it, China will fall behind in AI adoption.

GPT-5 may come out at the end of this year, and AGI may arrive two years after that. If foreign industries are fully AI-enabled by then, will the Chinese side still be waiting for Baidu? I think the Chinese people deserve the best AI.

But I don’t think China will be fully suppressed in the AI competition. I’ll show you screenshots of a few key papers we mentioned last week when we talked about “The Enlightenment Moment of Language Modeling”:

Look at how many Chinese names are on them. It can be said that half of today’s GPT talent is Chinese. Many of the popular applications that appeared online after GPT came out were also built by Chinese people. It’s just that they all work at Microsoft, Google and Stanford.

So if China is lagging behind in AI, it’s definitely not because the Chinese can’t do it. Since Chinese people are very good, we believe that we will not be behind in AI forever.

From Day Lesson: AI is responsible for prediction, people are responsible for judgment

Reader Fung Cheuk Lam: I would like to ask Wan Sir: what is your opinion of data science versus AI, and which would you suggest if your son were about to start a master’s degree?

Reply from Wan Weigang -

I would probably advise him to take data science. Let me tell you a story that is still unfolding.

This wave of large language models, represented by GPT-4, has a byproduct.

It killed a discipline called ‘Natural Language Processing (NLP)’.

Many universities offer NLP as a major, and many large companies have specialized NLP R&D teams. NLP is an interdisciplinary field spanning computer science, AI, and human linguistics, and it was previously regarded as the main hope for achieving general artificial intelligence. NLP studies how to make machines understand human language, and its applications include machine translation, speech recognition, search engines, intelligent assistants, and so on. Over the years, the field has achieved a great deal through the efforts of countless people ……

But now those achievements are no longer meaningful. GPT takes a completely different approach: unsupervised learning. The Transformer architecture, together with the “enlightenment” and “emergence” that happened around 2022, automatically solved all the problems that NLP wanted to solve but never solved perfectly. It turns out that AI doesn’t need to learn language from the rules that humans find for it; machines automatically discover all the laws of language that we know, and those we don’t know as well. Translation, speech recognition, search and intelligent assistance are all things GPT does natively. It has also automatically mastered a whole set of other abilities, including logical reasoning, few-shot learning, automatic classification, and so on, as well as abilities that we haven’t yet realized it has.

Comparing GPT to natural language processing is like comparing AlphaZero to the Go patterns summarized by human players. It turns out that relying on humans to summarize the rules and then teaching them to computers is a clumsy approach in which human thinking slows the computer down. It turns out that letting the computer solve the problem directly by brute force is the most fundamental, fastest and best way.

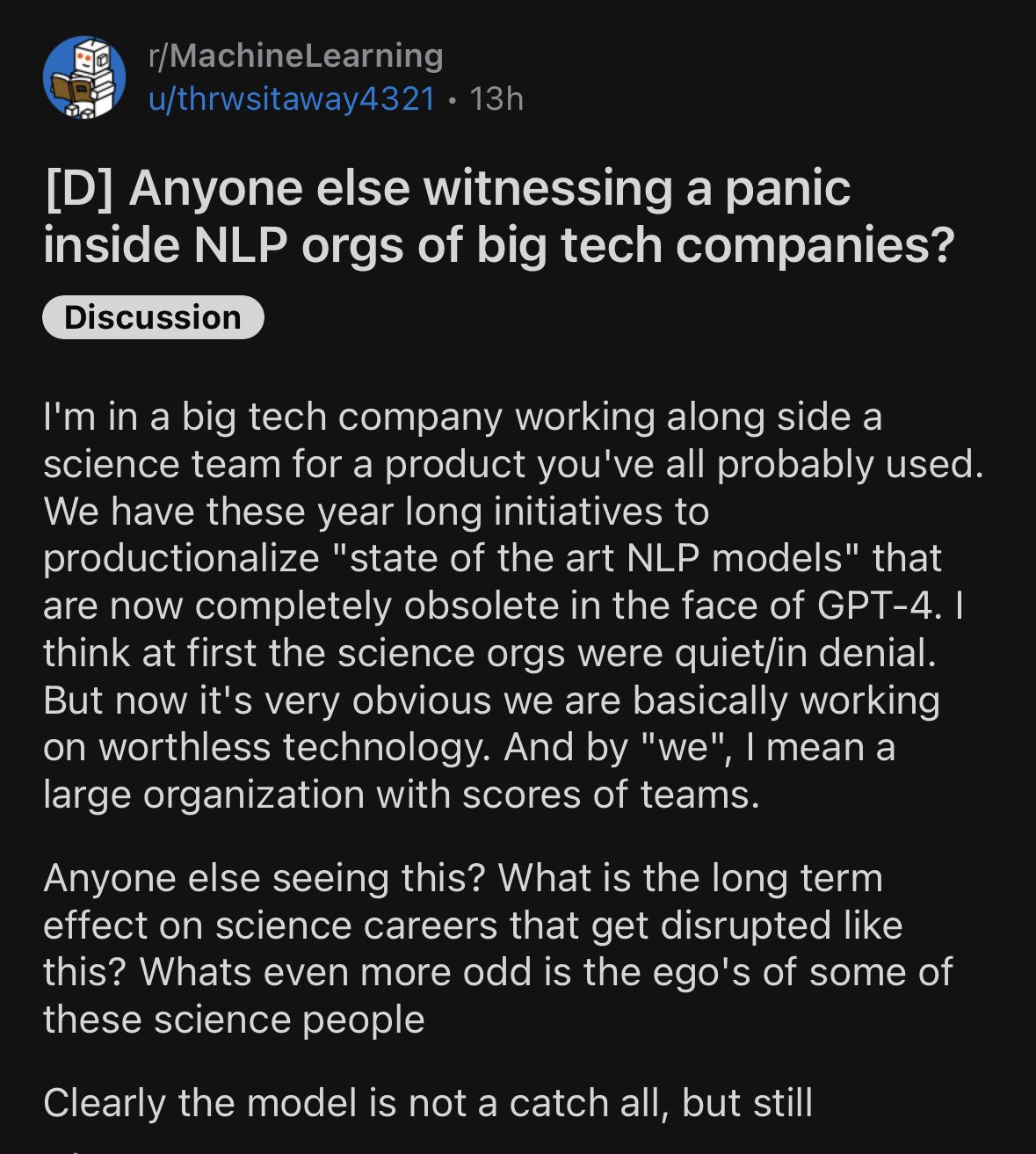

Human players can continue to learn Go patterns; after all, the game itself is fun. But where do NLP developers, professors and students go from here? Pessimism is already permeating the online community.

The initial attitude of some practitioners was denial, just like the initial reaction of a terminally ill patient …… But with GPT-4 coming out this week, the situation has become very clear.

I read a couple of days ago that the “22nd Chinese Conference on Computational Linguistics” will be held in Harbin on August 3, 2023, and papers are now being solicited. The topics include machine translation and multilingual information processing, question answering systems, machine reading comprehension, text generation, text summarization, human-computer dialogue, and so on …… All of them have been solved by GPT in a better way. This conference has been held for over 30 years, and maybe this is its last year. Maybe the theme of the conference should be changed to “GPT is here, how do we find jobs again”.

It’s sad to see the technology you’ve worked on for a decade or even several decades become irrelevant overnight. In fact, NLP is not the only discipline being disrupted; other AI disciplines, such as the Bayesian school of analysis, are also in crisis. The famous linguist Chomsky published an article in the New York Times a few weeks ago slamming ChatGPT, and the comment section filled up with people scolding him.

My friends, a new era has arrived, and many things are obsolete. The most absurd part is that GPT didn’t set out to eliminate those disciplines. It probably never even thought about them; a lucky technological mutation simply led to all this. It destroys you, and that has nothing to do with you.

That’s why it’s dangerous to bet on a technology that’s too narrow. Going back to the earlier question, data science has a much wider range of applications than just AI. Even if AI takes over data analytics in the future, you can still use that knowledge to help others understand data and make decisions based on it, so it may be relatively safer.

From Day Lesson: A Smarter Society

Reader Ming, 70man: A society that uses AI to coordinate and make accurate predictions sounds like a planned economy, except that the planning is more accurate and rational. Is AI a technology that favors centralization of power? Will it become a “super-planned” society in the future?

Reply from Wan Weigang -

A few years ago, some Internet gurus said that now that AI predictions are so powerful, we can go back to a planned economy - they were totally wrong. The essence of a planned economy is not prediction, but command and control.

Suppose I predict that blue clothing fabric will be popular next year, so I plan to produce more blue cloth this year. That is not a planned economy. A planned economy is when the state assigns your factory the task of producing a set amount of blue fabric this year, with the purchase price and the purchase quantity fixed, and all you have to do is fulfill the task. In the former case you are active; in the latter case you are passive.

AI forecasting is about facing the uncertainty of the market better; a planned economy is about eliminating uncertainty.

One of our columns in the fourth quarter was called “What is “profit” anyway”, which talked about economist Frank Knight’s theory of market uncertainty. The fundamental source of market uncertainty is the uncertainty of human desires: this year I like red, next year I’ll like blue, I’ll like whatever I like, and you can’t control that.

In a market economy, entrepreneurs guess what consumers will like, and can even invent new things for them to like. This is essentially gambling, and if you bet wrong you lose a lot.

In a planned economy, people give up gambling and simply say: I will produce whatever the state arranges for me to produce. You do gain a fair degree of security and stability, but you have to cede your autonomy and obey everything from above.

So do you think the “higher-ups” will actively predict what the people will like next year so that they can make better plans? No, they won’t. What a joke: I am in charge of the economy now, and whatever I order produced gets produced, so naturally people should like whatever I produce! Isn’t it better to have everything under my control and in good order?

Only a market economy can make people’s lives colorful. An economy dies as soon as it is tightly controlled, and AI can’t change that.