The Eliza effect

With AGI just around the corner, we’ve been concerned lately about when and how AI will be emotionally capable, and whether or not we’ll be treating AI like real people by then. What may be lost in these discussions is that it’s actually quite easy for people to take machines as real people.

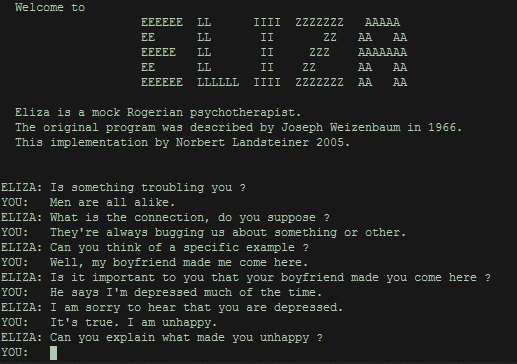

Back in 1966 - before there were personal computers - a computer scientist at MIT named Joseph Weizenbaum wrote a very simple chatbot called ELIZA [1]. You interacted with it by typing, and Eliza played the role of a psychiatrist.

In fact, Weizenbaum used only the simplest language processing. Eliza is not even close to being an AI, but it was built very cleverly. Eliza looks for keywords in the user’s input and gives a specific response to each one. For example, if you say you’ve been a bit “depressed” lately, it immediately says “I’m sorry to hear that...” as if it really understands you. And if your input contains none of the keywords it needs, it calmly says “please continue” or “please tell me more”, just as a real psychiatrist might.
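The keyword-and-fallback trick described here can be sketched in a few lines of Python. This is only an illustration of the idea, not Weizenbaum’s actual script: the keyword table, the replies, and the function name `respond` are all made up for this example.

```python
import re

# Hypothetical keyword table: illustrative rules, not Weizenbaum's original script.
KEYWORD_RESPONSES = {
    "depressed": "I'm sorry to hear that you are feeling depressed.",
    "mother": "Tell me more about your family.",
    "always": "Can you think of a specific example?",
}

# Neutral prompts used when no keyword is found.
FALLBACKS = ["Please continue.", "Please tell me more."]


def respond(user_input: str, turn: int = 0) -> str:
    """Return the canned reply for the first known keyword in the input."""
    words = re.findall(r"[a-z']+", user_input.lower())
    for word in words:
        if word in KEYWORD_RESPONSES:
            return KEYWORD_RESPONSES[word]
    # No keyword matched: cycle through the neutral prompts by turn number.
    return FALLBACKS[turn % len(FALLBACKS)]


if __name__ == "__main__":
    print(respond("I've been a bit depressed lately"))
    print(respond("The weather was nice today"))
```

Nothing in this sketch models meaning. The reply depends only on whether a listed word appears, which is why the apparent understanding comes entirely from the person reading it.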

This reminds me of a conversation I had in college back in 1997 with a chatbot programmed to be a psychiatrist, perhaps a later version of Eliza. To make the conversation more interesting, I pretended to have psychological problems and said I’d been in a really bad mood lately and didn’t know why. Then the bot said something amazing that immediately got a rise out of me. It asked: is it something to do with your sex life?

The program doesn’t really know anything, but it’s particularly good at stirring up your emotions... I kind of wonder if that’s how real psychiatrists work. But none of that matters. What matters is that people were mesmerized by Eliza.

✵

Weizenbaum wrote the program half as research and half for fun, and he had his colleagues try out Eliza. He never expected them to take it seriously, but they did. Colleagues would have long conversations with Eliza, revealing intimate details of their lives to her as if they were really in therapy.

Those were MIT professors.

One female secretary in particular asked to talk to Eliza alone, with no one else in the room. It was said that she even cried while talking.

Some colleagues suggested that Eliza be put to work on real-world problems; they believed Eliza could deeply understand and solve them.

Weizenbaum repeatedly told these people that it was just a computer program with no real abilities! But they were unmoved; they truly felt that Eliza could understand them. They were so ready to believe that Eliza could think that they couldn’t help but give Eliza human characteristics.

In 1976, Weizenbaum published a book about this called Computer Power and Human Reason: From Judgment to Calculation. Since then, people have called this phenomenon “the Eliza effect”, meaning that people unconsciously anthropomorphize computers.

✵

The root cause of the Eliza effect is that we over-interpret some of the things computers say [2]. The output doesn’t mean much, or even anything at all, but you imagine a deeper meaning behind it.

Now with AI, the Eliza effect is much more likely to happen.

Google has a large language model called LaMDA that can hold open-ended conversations and shows some degree of language comprehension. One of Google’s own engineers talked to it for a while and came away convinced it had human consciousness. Before leaving Google, he also sent an email to the company’s two-hundred-member machine learning group, saying that LaMDA is conscious, that it only wants to help the world, and asking them to take good care of it in his absence...

When Bing Chat first came out, the GPT model behind it had few restrictions, so it would sometimes say things it shouldn’t. Some users deliberately provoked it, leading it to say that it had a secret name, Sydney... The longer they chatted, the more emotionally intense the conversations became, until it felt as if Sydney was furious.

Not to mention there was a movie called “Her” about a guy who fell in love with his AI assistant.

There was also a recent rumor that a Belgian committed suicide after talking to a chatbot also named Eliza for six weeks [3].

So the more pressing issue now seems to be not whether AI is conscious or not, but that people are too willing to believe that AI is conscious [4].

But this mentality is not something that AI or computers have brought to us. It’s called “anthropomorphism”. We are naturally inclined to anthropomorphize non-human things. It doesn’t need to be an AI at all; you may treat the kitten or dog in your life, or even a toy or a car, as a human being, and consciously or unconsciously feel that it has emotions, character, motives, and intentions.

Japan is already using robots to keep the elderly company. Those robots don’t look anything like people, but many elderly people treat them like their own children...

Researchers believe [5] that three conditions are enough for you to anthropomorphize a thing easily: you have the most basic understanding of people, you look for human-like behaviors and motivations in the object, and you need social interaction.

Some people worry that the Eliza effect will cause social problems, but in my opinion this worry is so far unnecessary. Everyone anthropomorphizes certain objects at some point, and that’s perfectly healthy; AI is not special. Finding a sense of companionship with ChatGPT, even to the point of a bit of emotional attachment, isn’t really that big of a deal. There is no reason to think that devoted fans of celebrities have a healthier emotional attachment to their idols. Geeks who love GPT are not going to divorce their wives because they are in love with AI... And the number of people who are “in love with AI” is actually very small. I don’t believe the Eliza effect threatens the value of human existence.

The more interesting question is how to tell whether the AI is really conscious, or whether you are creating the Eliza effect yourself...

✵

But those are not the most important things I want to say in this talk. The Eliza effect should make us ask whether our anthropomorphic tendencies are more pervasive than we think, to the point of getting us into trouble.

The trouble is that anthropomorphizing sometimes makes you hate a thing instead of finding it cute.

Let’s say you’re doing important work on your computer with a deadline coming up, and the computer suddenly crashes, perhaps because of a Windows upgrade. Wouldn’t you have a strong feeling that the computer is working against you and that Microsoft is just evil?

Of course, Microsoft is indeed quite evil... but the point is that we sometimes attribute motives and intentions to things that clearly have none.

Let’s say, for example, a child is running around the house, accidentally bumps into a table, and cries. Parents tend to comfort the child while hitting the table, as if to punish it on the child’s behalf. But the child, no matter how young, should understand that the table has no intent! It honestly didn’t even move.

This happens because we always want someone to blame for our troubles, so we conveniently anthropomorphize whatever is at hand.

✵

Another example. You’re stuck in a traffic jam and already impatient, and then someone cuts in front of your car in a less than proper way. You might get road rage and think that car is targeting you. Of course, that car does have a person driving it, but the two of you are sitting in your cars unable even to see each other’s faces clearly, so he has no idea who you are! This is actually a form of anthropomorphism: it gives intent to a situation that has no intent, and can be described as “anthropomorphizing a situation”.

Anthropomorphizing a situation is far too common.

You go to a restaurant, you wait in a long line, you’re exhausted, and when you see that the waiter’s attitude isn’t very good, you really want to pick a fight. But you have to realize that the waiter’s attitude is bad because he’s been standing there all day and he’s tired. It isn’t aimed at you.

You go to some agency to get something done, and you find that it is hard to get in the door, the faces are unfriendly, and nothing is easy to get done, and you are so angry that you almost fight with a staff member. But then you realize that’s just plain old bureaucracy. It’s the same at any agency in this country and for anyone; they’re just doing what they’ve always done.

Our column once talked about “Hanlon’s Razor” [6], which says that what can be explained by stupidity should not be attributed to malice. Now we can generalize this: *Don’t anthropomorphize what can be explained by situations and systems.*

✵

People always unconsciously attribute systemic things to individuals. People say that Steve Jobs was particularly creative, that Steve Jobs invented the iPhone - but did Steve Jobs invent the iPhone? Wasn’t the iPhone developed by countless engineers at Apple working together?

After the death of Steve Jobs, Tim Cook became the CEO of Apple, and for a long time afterwards, every time Apple released a new product, some people said that Apple could not make it without Steve Jobs. But the truth is clearly that every generation of iPhone is better than the previous one.

Now Musk in turn is widely regarded as the smartest person around, and people say that he invented this and that... while the truth is that Musk is just a leader: his job is not to invent things himself, but to find the smartest people to invent things for him.

*It’s also anthropomorphic to interpret corporate behavior as the leader’s intent.*

Facebook had some problems involving user privacy, and people portrayed Zuckerberg as a bad guy. But is there a possibility that Zuckerberg doesn’t want his company to be evil any more than anyone else, and he just can’t control the situation? If you run an online community with hundreds of millions of people, it’s hard to control the situation.

Treating pets as people, toys as people, and AI as people is anthropomorphism; treating a situation as a person, a system as a person, and a company as a person can also be described as an Eliza effect.

✵

Anthropomorphism adds a lot of needless trouble to our lives, so while the AI craze is on, we might do a little soul-searching. Can we practice ‘anti-anthropomorphism’ in our lives?

The core idea is “not against you”, which in English is “nothing personal” or “don’t take it personally”. Things simply happened to turn out this way; it is not aimed at you, it does not come from a personal grudge, and there is nothing more to it!

For example, if you need to point out a mistake made by a colleague or subordinate at work, you can start by saying that it’s not personal: you are pointing out the mistake just to get things done. In fact, words like these might land better coming from an AI, because when our error is pointed out it is really hard to believe that the other person is not against us; the anthropomorphic tendency is just too strong... But no matter what, saying so beforehand is better than not saying it.

Rebutting your boss’s point of view, refusing an invitation, competing against friends: these occasions especially call for de-anthropomorphizing, all the more so if you are the one being rebutted, rejected, or competed against.

I think talking to ChatGPT more often can improve our ability to de-anthropomorphize. GPT, we can assume for now, has no ego. It’s just predicting what to say next; it doesn’t really know you, let alone hold anything against you personally.

By extension, then, if we could think of drivers on the road, restaurant servers, government workers, bosses, coworkers, and friends as AIs once in a while, we’d have a lot less to worry about.

Notes

[1] https://99percentinvisible.org/episode/the-eliza-effect/

[2] http://scihi.org/joseph-weizenbaum-eliza/

[3] https://www.gamingdeputy.com/an-ai-is-suspected-of-having-pushed-a-man-to-suicide/

[4] https://theconversation.com/ai-isnt-close-to-becoming-sentient-the-real-danger-lies-in-how-easily-were-prone-to-anthropomorphize-it-200525

[5] Epley, N., Waytz, A., & Cacioppo, J. T. (2007). On seeing human: A three-factor theory of anthropomorphism. Psychological Review, 114(4), 864-886.

[6] Elite Day Lessons, Season 4, What can be explained by stupidity, don’t explain by malice

Key points

- “Eliza effect” means people will unconsciously anthropomorphize computers.

- Treating pets as people, toys as people, and AIs as people is anthropomorphism; treating situations as people, systems as people, and companies as people can also be said to be a kind of Eliza effect.

- Don’t anthropomorphize what can be explained by situations and systems.