AI Topic 6: AI for Prediction, Human for Judgment

Which human capabilities cannot be replaced by AI? Everyone needs to think about this question. In the book Power and Prediction, three Canadian economists offer an insight that I think could serve as a guiding principle for the division of labor between AI and people.

*Simply put, the two make decisions together: AI is responsible for prediction, and people are responsible for judgment.*

To illustrate this, let’s look at a real case. The ride-hailing company Uber has been testing self-driving cars. In 2018, one of Uber’s self-driving cars struck and killed a pedestrian in Arizona, setting off a heated debate.

If you analyze the accident carefully, you find that six seconds before the impact, the AI had already detected an unknown object ahead. It did not decide to brake immediately, because it estimated the probability that the object was a person to be very low, although that probability was not zero.

The AI has a judgment threshold: it brakes only if the probability that the object ahead is a person exceeds a certain value. Six seconds before impact, the probability did not exceed the threshold; by the time the AI finally recognized the object as a person, it was too late to brake.

Let’s divide the braking decision into two steps: prediction and judgment. The AI’s prediction may not have been accurate, but it did predict that the object might be a person, and it gave a non-zero probability. The problem lies in the next step, the judgment. Deciding whether to brake at that probability was the judgment that led to tragedy.

Uber’s AI used a threshold rule, which is understandable: if the AI braked whenever the probability that an object ahead is a person was anything other than zero, it would be braking constantly and the car could not be driven at all. You can certainly argue that the threshold was set unreasonably, but some judgment is always required here.

Note that it is precisely because of AI that we can now do this kind of analysis. Human drivers have hit pedestrians countless times, and no one ever bothered to ask whether the driver made a prediction error or a judgment error. Yet the analysis is perfectly reasonable, because the two kinds of error are very different in nature. Ask the driver: did you simply not see anyone ahead? Or did you sense that the object ahead could be a person, but think the likelihood was low, and because you were in a hurry, decide that such a small probability was acceptable and drive on?

Did you make an error in prediction or an error in judgment?

✵

Decision Making = Prediction + Judgment.

Prediction tells you the probability of each possible outcome; judgment is how far you are willing to accept each outcome.

Earlier in our column we discussed Tim Palmer’s The Primacy of Doubt, which deals specifically with how to make decisions based on predicted probabilities [1]. Say you are holding an outdoor party this weekend and have to decide whether to rent a tent in case of rain. The weather forecast tells you there is a 30% probability of rain that day; that is the prediction. Whether the damage from rain is acceptable at that probability is your judgment.

The rule we discussed there was this: as long as the cost of taking action (the tent rental) is less than the loss (how much trouble rain would cause you) multiplied by the probability, you should take action and rent the tent. In the context of this talk, though, note that this “should” is only a suggestion to you.

Whether to act is still up to you, because in the end you are the one who bears the loss, not the AI and not the person advising you with the formula. Whether the guest is the Queen of England or your mother-in-law, and whether a guest getting wet is a big deal or a small one, is not something the AI can know. That really is your subjective judgment.

AI is very good at predicting weather probabilities, but judging the consequences of the weather requires more specific information, perhaps information only you have. So it is you who should make the judgment, not the AI.
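The tent rule above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `should_act` and all dollar figures are hypothetical and do not come from either book. The forecast probability stands in for the AI’s prediction, while the loss figure is a number only you can supply.

```python
def should_act(p_event: float, cost_of_action: float, loss_if_unprotected: float) -> bool:
    """Cost-loss rule: act when the action costs less than the expected loss."""
    if not 0.0 <= p_event <= 1.0:
        raise ValueError("p_event must be a probability between 0 and 1")
    expected_loss = p_event * loss_if_unprotected
    return cost_of_action < expected_loss


# Prediction (from the forecast): 30% chance of rain.
p_rain = 0.30

# Judgment (from you): how bad is a rained-out party? Hypothetical figures.
tent_rental = 200.0
loss_casual_party = 500.0       # friends would shrug it off
loss_important_guest = 5000.0   # a soaked guest of honor would be a disaster

print(should_act(p_rain, tent_rental, loss_casual_party))     # False: 200 is not below 0.3 * 500
print(should_act(p_rain, tent_rental, loss_important_guest))  # True: 200 is below 0.3 * 5000
```

The same 30% forecast leads to opposite recommendations, and the only thing that changed is the loss figure, which is the human’s judgment.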

*Decision making in the age of AI = AI’s predictions + human judgment*

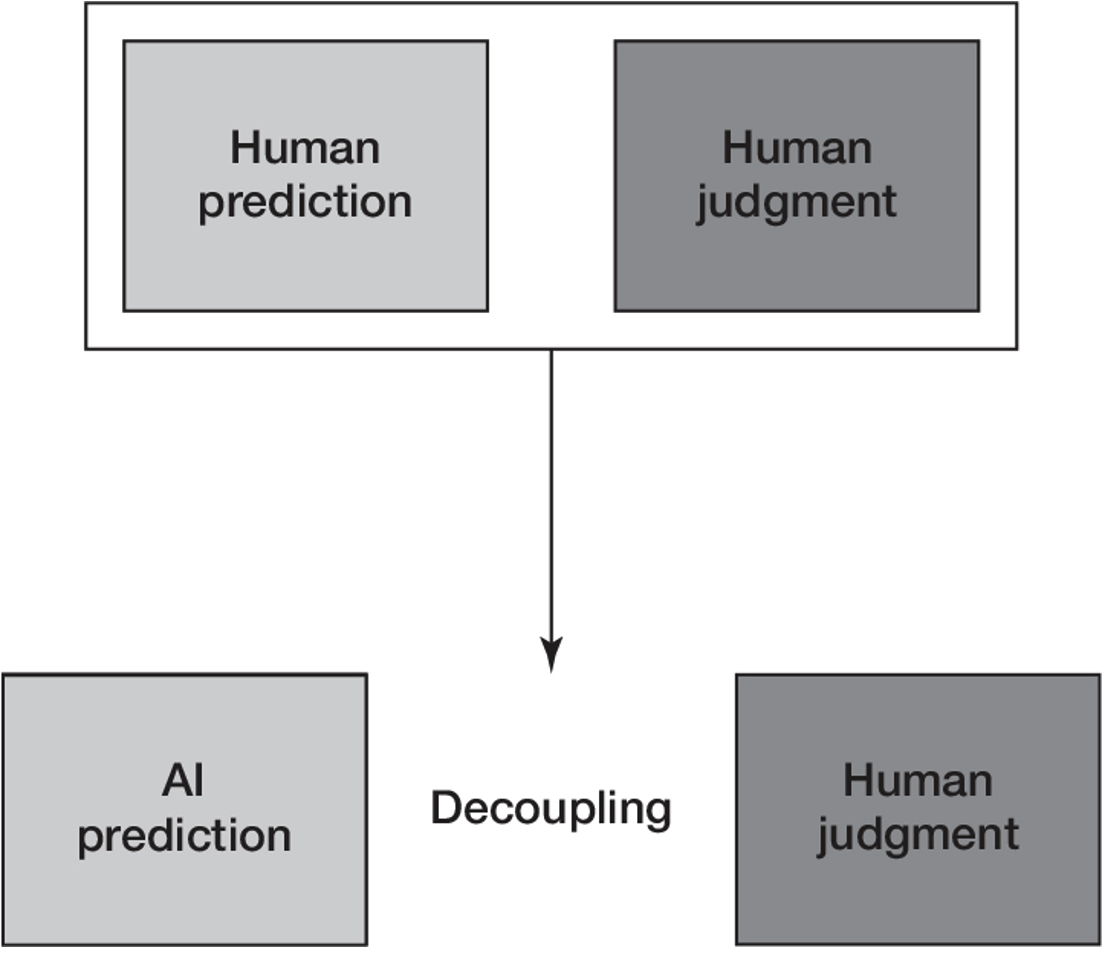

In other words, we should decouple prediction from judgment. In the past, a human handled both the prediction and the judgment in every decision; now AI should be in charge of prediction and a human in charge of judgment.

We recognize that AI is smarter than people, but we also know that it is people, not AI, who really bear the risks and experience the consequences. Predictions are objective; judgments are subjective. AI can’t usurp people’s judgments, and people shouldn’t dictate AI’s predictions either. AI and people each have their own place, and the division of labor is clear.

✵

How can this division of labor be implemented? *One way is to have humans set an automatic judgment threshold for the AI.*

For example, in a self-driving car, we can stipulate that the AI must brake whenever it predicts that the probability that the object ahead is a human being is higher than 0.01%. Or 0.00001% would do; either way, there has to be some non-zero value. This criterion, this line, is certainly not set by the AI itself but programmed in advance by humans. The line is written into the AI, but the instruction has to come from a human being, because only a human being can judge the value of a human life. For an AI, the value of a human life cannot be estimated in any objective way.
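A minimal sketch of this separation, assuming a hypothetical `BrakePolicy` object that holds the human-chosen line and a `decide_brake` function that applies it to whatever probability the perception model reports. Neither name comes from any real self-driving system, and real systems are far more involved.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BrakePolicy:
    """Judgment: a line drawn in advance by humans, not learned by the model."""
    person_probability_threshold: float  # 0.0001 means 0.01%


def decide_brake(p_person: float, policy: BrakePolicy) -> bool:
    """Combine the AI's prediction (p_person) with the human-set threshold."""
    return p_person > policy.person_probability_threshold


# The threshold is configuration supplied by people, here the 0.01% from the text.
policy = BrakePolicy(person_probability_threshold=0.0001)

print(decide_brake(0.00005, policy))  # False: below the line, keep driving
print(decide_brake(0.02, policy))     # True: above the line, brake
```

Keeping the threshold in its own object makes it explicit that changing the line is a human decision and does not require retraining or touching the predictor.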

In fact, we already use this kind of judgment. When you paid with cash in a store, the cashier had to predict and judge for herself whether the bill was real or counterfeit. Now you swipe a credit card, and whether the card is genuine is no longer decided by the cashier but by the credit card network, using an algorithm that predicts and judges. The algorithm estimates the probability that the card is fake (prediction), then checks whether that probability is above a certain line (judgment) and decides whether to decline the card. That line is not calculated by any AI. It is drawn in advance by some committee of human beings, because it is human beings who offend customers if the line is drawn too low, and human beings who bear the losses if it is drawn too high.
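One way to see why the line depends on human stakes: suppose, purely as an assumption for illustration, that the committee puts one cost on wrongly declining a genuine card and another on approving a fraudulent one. Declining is then worthwhile when the expected fraud loss exceeds the expected cost of annoying a genuine customer, which gives a break-even probability. The function names and figures below are hypothetical and are not how any real card network works.

```python
def break_even_threshold(cost_false_decline: float, loss_from_fraud: float) -> float:
    """Fraud probability above which declining has the lower expected cost.

    Decline when p * loss_from_fraud > (1 - p) * cost_false_decline,
    which rearranges to p > cost_false_decline / (cost_false_decline + loss_from_fraud).
    """
    if cost_false_decline < 0 or loss_from_fraud <= 0:
        raise ValueError("costs must be non-negative and the fraud loss positive")
    return cost_false_decline / (cost_false_decline + loss_from_fraud)


def decide_decline(p_fraud: float, threshold: float) -> bool:
    """Prediction (p_fraud) meets judgment (threshold)."""
    return p_fraud > threshold


# Human-supplied stakes (hypothetical): an offended customer "costs" 20,
# an approved fraudulent charge costs 180.
threshold = break_even_threshold(cost_false_decline=20.0, loss_from_fraud=180.0)

print(decide_decline(0.05, threshold))  # False: approve the transaction
print(decide_decline(0.30, threshold))  # True: decline the card
```

If the committee decides that offending customers matters more, `cost_false_decline` goes up and the line moves up with it; the algorithm’s probability estimate does not change at all.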

We will face all sorts of similar situations in the future. The book Power and Prediction suggests that such judgments are best made by an agency like the FDA, much as it evaluates whether a new drug is ready for market.

✵

*Another way is to quantify the judgment in money.*

Suppose you have rented a car for a fairly long trip, and you have two routes to choose from. The first is relatively direct: you just drive, and there is not much scenery along the way. The second passes through a scenic area, which is a pleasure for you, but the pedestrians there increase the probability of an accident. If the AI simply told you which road has the higher accident probability, you might still find it hard to judge.

It is more convenient if the AI tells you that the rental insurance premium for the scenic route is one dollar higher than for the first route. That one-dollar difference represents the AI’s prediction of the difference in risk between the two roads.

Now the judgment is yours. If the scenery is worth more than $1 to you, take the scenic route; if you are not that interested in the scenery, save the $1.

You see, the AI doesn’t need to know you, and it can’t know you. It is your choice between the dollar and the scenery that reveals your preferences. In economics this is called “revealed preference”: many of a person’s preferences cannot be put into words, but as soon as they are tied to money they can be expressed.
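The route example can be sketched as follows. The accident probabilities and the expected claim cost are made-up numbers chosen so that the premium difference comes out at about one dollar, and the function names are hypothetical. The point is only that the prediction is folded into a price, and the human compares that price with something only they know.

```python
def extra_premium(p_accident_direct: float, p_accident_scenic: float,
                  expected_claim_cost: float) -> float:
    """Prediction: price the difference in accident risk between the two routes."""
    return (p_accident_scenic - p_accident_direct) * expected_claim_cost


def choose_route(scenery_value_to_you: float, premium_difference: float) -> str:
    """Judgment: only you know what the scenery is worth to you."""
    return "scenic" if scenery_value_to_you > premium_difference else "direct"


# Hypothetical predictions from the AI and the insurer.
premium_difference = extra_premium(
    p_accident_direct=0.00010,
    p_accident_scenic=0.00015,
    expected_claim_cost=20_000.0,
)
print(f"Extra premium for the scenic route: ${premium_difference:.2f}")

print(choose_route(5.00, premium_difference))  # scenic: the view is worth more than the premium
print(choose_route(0.25, premium_difference))  # direct: the view is not worth the premium
```

Note that `choose_route` never sees the accident probabilities. The person’s choice reveals how much they value the scenery without the AI having to model them.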

✵

*Decoupling prediction from judgment is empowering for people.*

It used to be that to drive a cab, knowing how to drive was not enough. First you had to learn the roads: you had to know the shortest route from any point A to any point B in the city. In the terms of this talk, you had to be able to predict. Now that AI has taken over route prediction, you only need to know how to drive, and you can work as a ride-hailing driver.

With AI, making a decision comes down to judgment. That is not to say decision-making is easy, because judgment has its own learning curve.

Most judgments in life are neither drawn up by a committee nor quantified in money; individuals have to analyze each situation on its own terms. How do you judge how good an outcome is for you, or how bad it really is, and whether you can live with it?

Some of it you may have read or learned from someone else. If you have heard that being burned by a red-hot soldering iron hurts, you will pay a very high price to avoid it. But hearing about it is not the same as experiencing it: you only know how much it hurts once you have actually been burned. Judgment has a large subjective component.

And the ability to judge is becoming more and more important. According to one US statistic, in 1960 only 5% of jobs required decision-making skills; by 2015 the figure was already 30%, and these were all high-paying positions.

Only people know how much it hurts, because people are not machines. And judgment, along with the decision-making authority that comes with it, is essentially a form of power. AI has no power. That is where the title of the book, Power and Prediction, comes from.

✵

When AI takes over prediction, the exercise of decision-making power at the societal level and at the organizational level of companies becomes a new issue.

Here’s an example. Because lead was known to be harmful, the US government banned the use of lead pipes for drinking water in new buildings starting in 1986. But many old buildings still have pipes containing lead, so the old pipes need to be replaced. Replacement is expensive and labor-intensive, and not all old pipes contain lead; you have to dig them up to find out. So whose home do you dig up first?

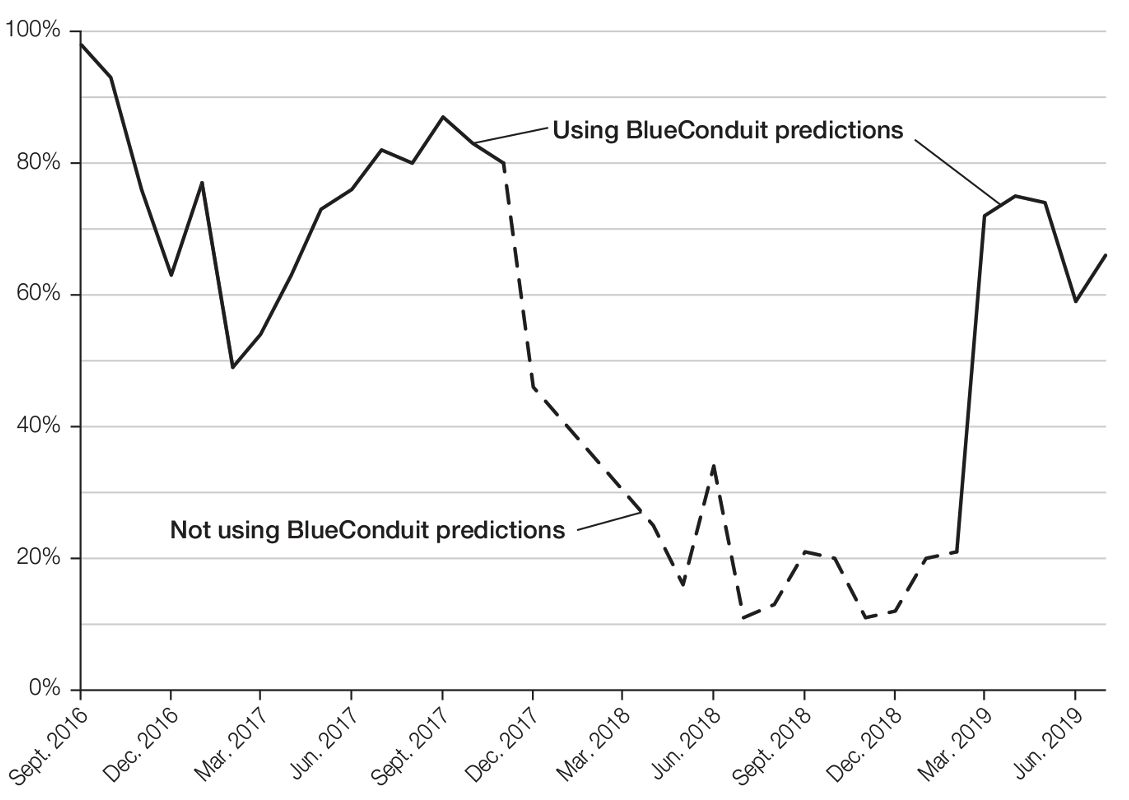

In 2017, two professors at the University of Michigan developed an AI that could predict, with 80% accuracy, which homes had pipes containing lead. The city of Flint, Michigan, used the AI. At first everything went well: the city sent construction crews to replace pipes in individual homes based on the AI’s predictions.

After the project had been running for a while, some residents objected. They asked, “Why were my neighbor’s pipes replaced but not mine?” Those in wealthier neighborhoods in particular asked, “Why are the pipes in poorer neighborhoods being replaced first? Don’t we pay more in taxes?”

Faced with all these complaints, the mayor of Flint decided to stop following the AI and instead go door to door. As a result, the accuracy of the decisions dropped from 80% to 15%. Some time later, a US court ordered that pipe-replacement decisions must first take the AI’s predictions into account, and only then did the accuracy recover.

The lesson of this episode is that AI changes who holds decision-making power. Without AI prediction, only the government could both predict and judge whose pipes should be replaced first, so the power rested entirely with politicians. With AI, residents and communities can make the judgment themselves, and, given the separation of powers in the US, the judicial system can also step in directly, so politicians no longer have the final say.

✵

To whom should the decision-making power really belong? Economically speaking, of course, it should belong to whoever makes the most efficient decisions for the organization as a whole.

In the past, when prediction and judgment were not distinguished, decision-making power was often best given to front-line people, because they had direct access to key information and their predictions were the most accurate. As the saying goes, “let those who can hear the gunfire give the orders.”

Now that AI has taken over prediction, human decision-making is all about judgment. So it is time to ask: should decisions be made by those whose personal interests are most closely aligned with the company’s, by those most affected by the decision, or by those who best understand its consequences? All of this means organizational change.

Change also means that the former predictor is now transformed into a judge, or an interpreter.

For example, the job of weather forecasting organizations used to be improving the accuracy of their forecasts. Now, with AI, their primary role may no longer be predicting the weather; perhaps it becomes explaining the forecasts to the public and to government agencies. The AI says there is a 5% chance of tornadoes next week, and government officials do not understand how much damage that 5% represents; perhaps you, the meteorologist, can explain it. The weather station of the future may be more about providing services to people, such as advising them on tomorrow’s travel plans, than about simply forecasting a probability of snow.

Another example: the primary task of radiologists used to be reading scans and predicting conditions. Now that AI’s ability to read scans has surpassed that of humans, radiology departments will have to find other services to offer, perhaps explaining conditions to patients. Or perhaps radiology departments should not continue to exist at all...

✵

As we said earlier in this AI series, handing decision-making power to AI would be hard for people to accept. However powerful AI is, we do not want to enshrine it as a god. It is better for AI to stay in the role of an assistant or a priest, and the final say should remain in human hands.

The book Power and Prediction offers a solution: AI is responsible only for prediction, and people keep control of judgment. In my opinion this division of labor is very reasonable, and I hope this talk brings you some comfort.

Notes

[1] The Primacy of Doubt 5: How to make decisions based on probability

Highlight

Decision making in the AI era = AI’s prediction + human judgment

Prediction is objective; judgment is subjective. AI can’t usurp human judgment, and humans shouldn’t dictate AI’s predictions. AI and humans each have their own place, and the division of labor is clear. There are two ways to implement this division of labor:

- Have humans set an automatic judgment threshold for the AI.

- Quantify the judgment in money.