AI Topic 2: A Dangerous Magic Weapon

On February 24th, OpenAI released a statement called “Planning for AGI and beyond” [1]. AGI is not the AI we use now for scientific research, drawing, or navigation, but “artificial general intelligence”: an intelligence that is not only at least at the level of a human being, but can also do all kinds of tasks.

AGI used to exist only in science fiction. I once thought my generation would never see AGI in our lifetimes, but OpenAI already has a roadmap. I’ve heard rumors that AGI could arrive by 2026, or even 2025. OpenAI’s next-generation language model, GPT-4, has already been trained, and GPT-5 is said to be in training now and considered certain to pass the Turing test.

So this is definitely a historic moment. But note that this OpenAI announcement is a very unusual document. You have never seen a tech company talk about its own technology this way: the point of the document is not to brag but to express worry. It is not worried about the company itself, nor about AGI technology; it is worried about how humanity will accept AGI.

OpenAI says, “AGI has the potential to bring incredible new capabilities to everyone; we can imagine a world where all of us can get help with virtually any cognitive task, providing a huge force multiplier for human ingenuity and creativity.” The document does not go on to say how awesome AGI is; instead, it repeatedly emphasizes the need for a “gradual transition”: to “give people, policymakers, and institutions time to understand what’s happening, to experience for themselves the benefits and drawbacks of these systems, to adjust our economy, and to put regulation in place.” It also says it will deploy new models “more carefully than many users would like.”

This is tantamount to saying that the technology leading to AGI is already available, but OpenAI is deliberately holding it back and releasing it as slowly as possible to give humans a chance to adapt.

Humans need time to adapt, and that is the theme of this talk. Kissinger and his co-authors make the same point in their book The Age of AI: AI is a definite danger to humanity.

Imagine you have an extremely powerful assistant named Long Ao Tian. He is better than you in every way, and he doesn’t even think on the same level as you. You often don’t understand why he decides things the way he does, but every decision he makes for you turns out to be better than what you originally had in mind. Over time, you get used to it and rely on him for everything.

Everything Long Ao Tian does proves that he is loyal to you... but ask yourself: do you really trust him completely?

✵

Actually, we have been using AI for a long time. Internet platforms like Taobao, Didi, and Douyin have hundreds of millions or even billions of users. It is impossible to manage so many users by hand, so they all use AI. Recommending products to users, dispatching takeout riders to pick up orders, raising ride prices during busy hours, even deleting posts with inappropriate content: these decisions are made wholly or mainly by AI.

And problems follow. If a company employee’s action harms you, you can protest and hold that person accountable; but if you feel harmed and the company says it was done by an AI that even the company itself doesn’t understand, would you accept that?

As we said in the last lecture, the intelligence of AI is now difficult to explain with human reason. If you ask Douyin why it recommended a particular video to you, Douyin itself doesn’t know. Maybe the values Douyin set have influenced the AI’s algorithm, or maybe Douyin simply can’t fully set the AI’s values.

Governments and the public are demanding that AI algorithms be audited, but how? These questions are still being explored.

✵

While we are still thinking about these simple applications, AI is already surging into entirely new areas. DeepMind, which became famous for AlphaGo and is owned by Google, has achieved the following in the past few years [2]:

Launched AlphaStar, which plays at the highest level of StarCraft II, a game with complex rules and an open-ended environment;

Launched AlphaFold, which predicts the shapes of proteins and is rewriting how research is done in biology;

In medicine, used AI to read X-ray images to help diagnose breast cancer, detected acute kidney injury up to 48 hours earlier than mainstream methods, and predicted age-related macular degeneration in elderly patients months in advance;

Launched two weather forecasting models: DGMR, which predicts whether it will rain in an area within the next 90 minutes, and GraphCast, which forecasts the weather over a ten-day period. Both are significantly more accurate than existing forecasts;

Used AI to redesign the cooling system for Google’s data centers, saving 30% of energy...

And so on and so forth. The scariest thing about these achievements is not that DeepMind disrupts traditional practices as soon as it enters a field, but that it isn’t focused on any specific field and wins big everywhere. Is there any field DeepMind can’t disrupt?

These are still only a small part of what DeepMind can do, and DeepMind is just a division of Google.

*A full AI takeover of research is just around the corner.*

If any scientific research project can be handed to AI to crack by brute force, how will humanity’s so-called scientific spirit, its creativity, be expressed?

If AI produces research results that humans not only couldn’t achieve but can’t even understand, how are we supposed to bear that?

✵

Whether AI will take our jobs and all that is a minor matter. The big issue now is AI’s dominance over, and possible destruction of, human society.

Wall Street firms doing quantitative trading already use AI to trade stocks directly, with great results. But AI trades at high frequency, and before anyone notices, it could create turbulence that might even trigger a market crash. Human traders are simply not fast enough to make a mistake like that.

In U.S. military tests, AI flying fighter jets has already outperformed human pilots. If your opponents use AI, you have to use AI too. So if everyone uses AI to operate weapons, and even for tactical-level command, who is responsible if something goes wrong?

Taking it a step further: based on the available research, I’m fully convinced that if we handed judicial decisions entirely to AI, society would definitely be more just than it is now. Most people would accept the rulings, but some who lose a case would demand an explanation. If the AI says, “My algorithm judged your probability of reoffending to be somewhat high, and I can’t tell you exactly why,” would you be okay with that?

Rational people need explanations: an explanation is what makes a decision reasonable, and an account is what makes it just. Without explanations, maybe... we will eventually all get used to not asking for them.

*We might accept AI decisions as fate.*

Xiao Li said, “I didn’t get into university. My college entrance exam score was higher than Xiao Wang’s, but Xiao Wang was admitted. AI must think my overall quality isn’t high enough... I won’t complain, because AI has its own plan for me!”

Old Li said, “That’s right, child, keep up the good work! I’ve heard people say that AI looks after simple, honest kids!”

Can you accept such a society?

✵

So what exactly is AI? At this stage it is no longer an ordinary tool but a magic weapon, one you have to spend enormous resources refining, like in immortal-cultivation novels.

According to Morgan Stanley’s analysis [3], GPT-5, now in training, uses 25,000 of NVIDIA’s latest GPUs worth $10,000 each, or $250 million in hardware alone. Add the costs of R&D, electricity, and the training corpus, and this is not a game every company can afford to play. And how much will be invested in training AGI in the future?

But once you have trained it well, it is yours. Running the AI (inference) consumes far fewer resources than training it, yet it is still costly to use: ChatGPT reportedly uses ten times as much computing power to answer a single question as a single Google search does... Even so, what you have is a magic weapon that everyone wants to use.

And as soon as AGI comes out, it will no longer be a tool. It will become your assistant. Children born today are natives of the AI age, and AI will be their babysitter, teacher, advisor, and friend. For example, if a child wants to learn a language, interacting and communicating directly with an AI will be much faster and easier than learning from a teacher or a parent.

We will get used to relying on AI. We may personify AI, or we may come to think that people are not as good as AI.

Take it one step further, and you can imagine many people treating AI as a deity. AI knows everything, and its judgment is almost always more correct than a human’s... So do you think people will go from trusting AI to blindly believing in AI?

*AI might take over the moral and legal questions of society.*

Guess what this resembles? Medieval Christianity.

✵

In the Middle Ages, everyone believed in God and the Church, and instead of judging for themselves, they went to church and asked the priests about anything. Books were expensive handwritten copies, ordinary people didn’t read, and knowledge was passed down mainly through conversations with priests.

It was the advent of the printing press, which let everyone read on their own and gain wisdom directly instead of blindly trusting the church, that paved the way for the Enlightenment, an era founded on reason.

The Enlightenment changed society in every way: the feudal hierarchy, the exalted position of the church, and the power of the king all disappeared. It produced a series of political philosophers, such as Hobbes, Locke, and Rousseau, and it was through their thinking that people came to understand what was happening in their times and how to live in the future.

Leaving God behind to embrace reason, the Enlightenment was the era that empowered the common man.

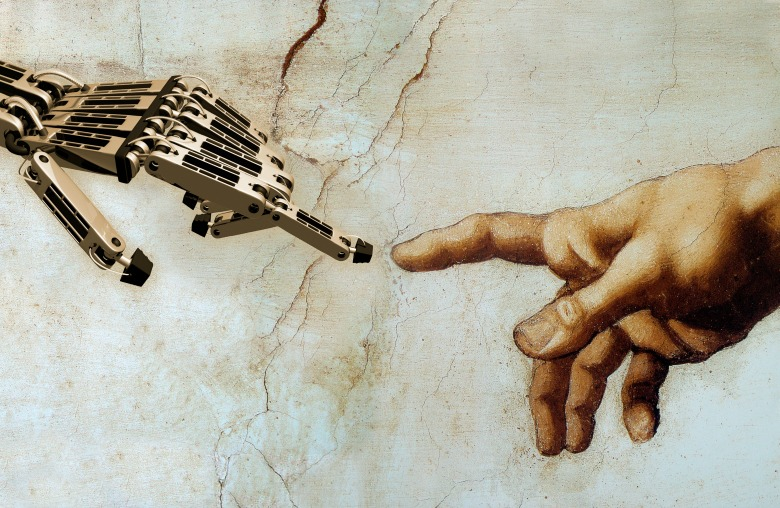

And today a new era has begun. We have found that there are places human reason cannot reach but AI can, and there AI does better than humans. If everyone believes in AI, then instead of judging for themselves they will open ChatGPT and ask the AI, and knowledge will be learned mainly through conversations with AI...

Then consider that AI can also easily recommend exactly the content best suited for you to absorb, and target you with propaganda that you comfortably accept...

Isn’t that God back again?

Take it one more step. Suppose that soon some companies succeed in refining AGI, and the technology is so difficult and expensive that almost no one else can replicate it. Then suppose these companies form an organization that uses AGI to code and design new AGIs on its own, iterating faster and faster and at an ever higher level, until its lead is so large that anyone who wants access to the highest intelligence has to go through it... What kind of organization would that be?

Isn’t this organization the Church of the New Age?

✵

This is why Kissinger and his co-authors wrote a book warning that we should not hand every task to AI or let AI run society automatically. They argue that real decision-making power in any situation should stay in human hands: to preserve democracy, voting and elections must be carried out by people, and human freedom of speech must not be replaced or distorted by AI.

That is why OpenAI said in its statement, “We hope for a global conversation about three key questions: how to govern these systems, how to fairly distribute the benefits they generate, and how to fairly share access.”

After this talk, you will understand their concern. *We are at a major turning point in history: an Enlightenment-level transformation of ideas and society, and an Industrial Revolution-level transformation of production and daily life, except that this one will happen very, very fast.*

In retrospect, such transformations did not always lead to good things. The Enlightenment was followed by some of the bloodiest revolutions and wars ever fought in the name of reason; the Industrial Revolution turned agricultural populations into urban populations on a massive scale, and the workers of Marx’s day were not very happy. These transformations brought all sorts of upheaval, but in the end society accepted the changes. What kind of upheaval will AI bring? How will society change? How will we accept it?

According to Kissinger and his co-authors, the key issue, the “meta-issue”, is that we lack a philosophy for the age of AI. We need our own Descartes and Kant to explain it all...

Notes

[1] https://openai.com/blog/planning-for-agi-and-beyond/

[2] https://www.deepmind.com/impact

[3] https://twitter.com/davidtayar5/status/1625140481016340483

Key takeaways

- AI is a definite danger to humanity: a full-scale AI takeover of scientific research is just around the corner; AI could take over the moral and legal questions of society; and it could come to dominate, and potentially destroy, human society.

- We are at a major turning point in history: an Enlightenment-level transformation of ideas and society, and an Industrial Revolution-level transformation of production and daily life, except that this one will happen very, very fast.