AI Topic 11: GPT’s Bottom Line and Achilles’ Heel


This talk focuses on GPT itself. We talked earlier about how large language models exhibit grokking, emergence, and chain-of-thought reasoning, which is why they now have such an amazing range of capabilities. But we still need to understand GPT more deeply: how exactly does it compare to the human brain? What are its limitations? Are there things it’s not good at?

At this moment of historical change, GPT is advancing very fast. Products, services, and academic papers are popping up everywhere, and progress is measured in days, so what you knew a month ago may already be outdated. But the book this talk draws on is excellent: Stephen Wolfram’s *What Is ChatGPT Doing … and Why Does It Work?*, published just on March 9, 2023.

This book will not go out of date, because it isn’t about GPT’s particular features but about mathematical principles and philosophical reflections, and math and philosophy don’t go out of style.

Wolfram may be familiar to you; our column has covered him before [1]. He is a true genius. He created the Mathematica software, built the WolframAlpha website, devised a computational language called the Wolfram Language, and proposed an entirely new view of fundamental physics. If you asked me to name the three smartest people alive today, Wolfram would have to be on the list; I’m not sure who the other two would be.

GPT and all the AI on the market today are essentially neural networks. Wolfram has been interested in neural networks for more than forty years: he was writing his own programs to study them as early as 1983, and he has recently done a great deal of research on GPT. OpenAI CEO Sam Altman has endorsed his book as the best explanation he’s ever seen.

In fact, Wolfram not only lays out GPT’s bottom line and its Achilles’ heel, but also offers an insight that could fairly be called astounding.

✵

Let me start with a small example to give you a better feel for GPT.

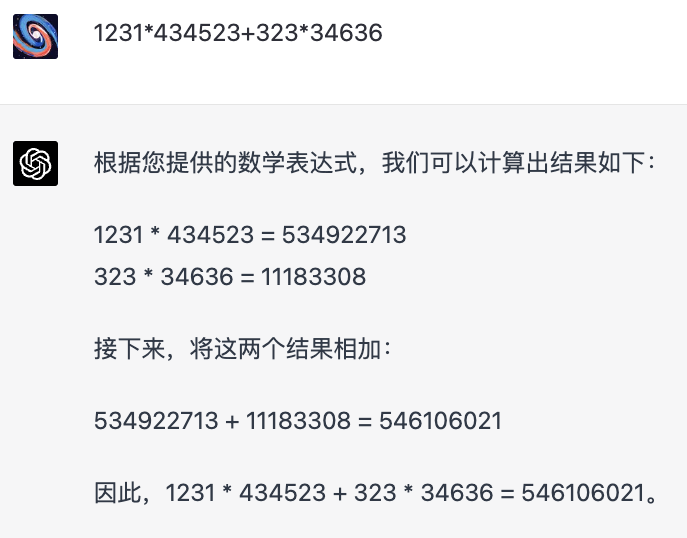

I asked GPT-4 to do a very simple calculation, with numbers I typed off the top of my head: what is 1231 × 434523 + 323 × 34636?

GPT-4 went through some elaborate working and gave the result 546106021, but if you check with a calculator, the correct answer is 546085241.
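For reference, here is the same calculation done exactly in a few lines of Python, whose integers have arbitrary precision. It also shows how far off GPT-4’s answer was.

```python
# Python integers have arbitrary precision, so this arithmetic is exact.
gpt4_answer = 546106021   # the result GPT-4 reported
first = 1231 * 434523     # 534897813
second = 323 * 34636      # 11187428
exact = first + second

print(exact)                # 546085241
print(gpt4_answer - exact)  # 20780
```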

What’s going on here? GPT-4 has strong reasoning ability; when I give it math olympiad problems, it sometimes gets them right. So how can it get such a simple calculation wrong?

It’s not that it can’t calculate at all. If you ask it for 25 + 48, it will certainly get it right. The problem is that it fails on calculations involving especially long numbers.

The fundamental reason is that GPT is a language model. It is trained on human language, and it thinks a lot like the human brain, and the human brain is not very good at this kind of arithmetic. If you were asked to do this calculation, wouldn’t you reach for a calculator too?

GPT is more like a human brain than like a normal computer program.

✵

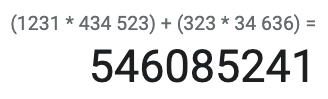

At the most basic level, all a language model does is produce a ‘reasonable continuation’ of a text; put bluntly, it predicts what the next word should be.

Wolfram’s example is the sentence “The best thing about AI is its ability to …”. What comes next?

Based on the probabilities it has learned from text, the model ranks candidate words; the top five are learn, predict, make, understand, and do. It then picks one of them.

The pick involves some randomness, controlled by a setting called ‘temperature’. It’s as simple as that. GPT generates content by repeatedly asking itself: given the words so far, what should the next word be?
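The loop just described can be sketched in Python under loudly stated assumptions: the probabilities below are made up, and the hypothetical `TOY_MODEL` only looks at the previous word, whereas a real model conditions on the whole text and scores its entire vocabulary. Temperature is applied in the common way, raising each probability to the power 1/T and renormalizing, so a low temperature almost always picks the top word and a high one spreads the choices out.

```python
import math
import random

# Hypothetical, made-up probabilities for the word after
# "The best thing about AI is its ability to" (illustration only).
CANDIDATES = {"learn": 0.30, "predict": 0.25, "make": 0.20, "understand": 0.15, "do": 0.10}

# A hypothetical toy "model" that looks only at the previous word.
TOY_MODEL = {
    "to": CANDIDATES,
    "learn": {"from": 0.7, "quickly": 0.3},
    "from": {"data": 0.8, "examples": 0.2},
}


def reweight(probs: dict[str, float], temperature: float) -> dict[str, float]:
    """Apply temperature: p ** (1 / T), renormalized. T < 1 sharpens, T > 1 flattens."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    logits = {w: math.log(p) / temperature for w, p in probs.items()}
    top = max(logits.values())  # subtract the max so exp() stays in range
    weights = {w: math.exp(v - top) for w, v in logits.items()}
    total = sum(weights.values())
    return {w: x / total for w, x in weights.items()}


def next_word(probs: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Sample one word from the temperature-adjusted distribution."""
    dist = reweight(probs, temperature)
    words = list(dist)
    return rng.choices(words, weights=[dist[w] for w in words], k=1)[0]


def generate(prompt: str, steps: int, temperature: float, rng: random.Random) -> str:
    """Repeatedly ask: given the words so far, what should the next word be?"""
    words = prompt.split()
    for _ in range(steps):
        dist = TOY_MODEL.get(words[-1])
        if dist is None:  # the toy model has no continuation for this word
            break
        words.append(next_word(dist, temperature, rng))
    return " ".join(words)


rng = random.Random(0)
print(generate("The best thing about AI is its ability to", 5, 0.8, rng))
```

Rerunning with a different seed or a higher temperature gives different continuations; with a temperature near zero the sketch always takes the most probable word.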

The quality of the output depends on what ‘should’ means here. Word frequency and grammar are not enough; the model must also account for meaning, and especially for how the words relate to one another in the current context. The Transformer architecture helps a lot with this, as do chain-of-thought reasoning and other techniques.

Yes, GPT is just looking for the next word; but as Altman put it, aren’t humans just surviving and reproducing? The most basic principles are simple, yet all sorts of amazing and beautiful things can come out of them.

The main way GPT is trained is unsupervised learning: show it the beginning of a text and ask it to predict what comes next. Why does this training work? Why is a language model so close to the human mind? How many parameters does it need to be intelligent enough? How much training text should it be fed?
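Why this counts as ‘unsupervised’ can be sketched in a few lines: raw text supplies its own answers, because every prefix of a sentence comes paired with the word that actually follows it. The sketch below, with hypothetical function names, builds those pairs and then ‘learns’ from them by simple counting (a bigram model), which is a drastic simplification standing in for the neural network GPT actually trains.

```python
from collections import Counter, defaultdict


def training_pairs(text: str) -> list[tuple[tuple[str, ...], str]]:
    """Turn raw text into (context, next word) pairs; no human labels needed."""
    words = text.split()
    return [(tuple(words[:i]), words[i]) for i in range(1, len(words))]


def fit_bigram(texts: list[str]) -> dict[str, dict[str, float]]:
    """Estimate P(next word | previous word) by counting: a stand-in for neural training."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for text in texts:
        for context, target in training_pairs(text):
            counts[context[-1]][target] += 1
    model = {}
    for prev, counter in counts.items():
        total = sum(counter.values())
        model[prev] = {word: n / total for word, n in counter.items()}
    return model


corpus = ["the cat sat on the mat", "the dog sat on the rug"]
print(training_pairs("the cat sat"))
print(fit_bigram(corpus)["the"])
```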

You might think OpenAI has already figured out all these questions and is deliberately keeping the answers from the public. It’s actually quite the opposite. Wolfram is fairly sure there are no scientific answers right now. No one knows why GPT works so well, and no first principle tells you exactly how many parameters a model needs; it’s all an art, guided by experience and intuition.

Altman has also acknowledged that they were favored by fortune: what OpenAI should be most thankful for is luck.

✵

Wolfram discusses several of GPT’s features, and among them I see three especially lucky discoveries.

**First, GPT was not taught any hand-written rules of the kind traditional natural language processing (NLP) relies on.** It discovered all linguistic features, grammatical and semantic, on its own; put bluntly, it cracked language by brute force. It has turned out that letting a neural network discover all the rules of language by itself, both those we can articulate and those we can’t, without human intervention, is the best approach.

**Second, GPT shows a strong capacity for “self-organization”, namely the “emergence” and “chain of thought” we talked about earlier.** You don’t need to impose any structure on it by hand; it grows all kinds of structure on its own.

**Third, and perhaps most amazing of all, GPT seems able to solve ostensibly quite different tasks with the same neural network architecture!** You would expect to need one neural network for drawing, another for writing articles, and another for programming, each trained separately; but in fact all of these can be done with the same neural network.

Why is that? Nobody can say for sure. Wolfram speculates that these seemingly different tasks are all ‘human-like’ tasks that are essentially the same, and that GPT’s neural network simply captures the general ‘human-like process’ behind them.

This is only speculation. Since none of these remarkable properties has a theoretical explanation yet, they should count as major scientific discoveries.

*This is GPT’s bottom line: it’s just a language model, but at the same time, it’s amazing.*

✵

So why is GPT not so good at math? Wolfram discusses this at length, and I’ll summarize it briefly with the diagram below.

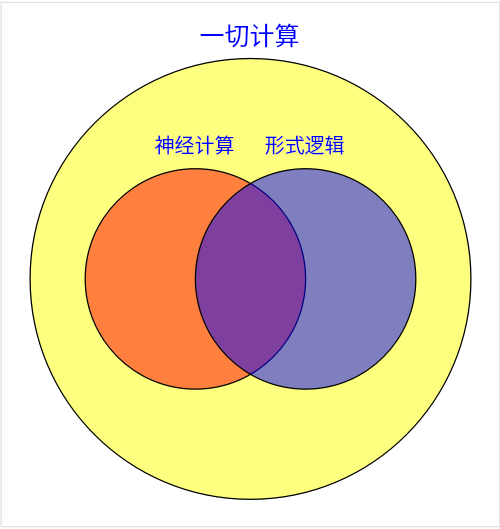

We use three sets to represent the various computations in the world, corresponding to the three circles in the diagram.

The large circle represents all computations. We can understand every phenomenon in nature as a computation, because at bottom they all follow the laws of physics. The vast majority of these computations are so complex (the one in our column ‘A Proton Is an Ocean’, for example) that we can’t even write down all the equations, let alone work them out with our brains or with computers; but we know they are computations too.

The small circle on the left, inside the big circle, represents neural computation: the kind of computation suited to neural networks. Our brains and all current AI, including GPT, live here. Neural computation is good at finding patterns in things but limited in its ability to handle math problems.

The small circle on the right, inside the big circle, represents ‘formal logic’, which is where math sits. Its hallmark is precise reasoning: it is not afraid of complexity and is always exact. Given equations and an algorithm, a machine here will grind through them diligently and work out the answer for you. This is the domain traditional computers are particularly suited to.

Neither the human brain, nor GPT, nor computers can handle all the computations in the world, so the two small circles are far from covering the big one. Scientific exploration is the effort to push the two small circles as far as possible into the unknown territory of the big circle.

The human brain and GPT can also handle some formal logic, so the two small circles overlap; but since we can’t handle especially complicated calculations, the overlap is small.

So could GPT become so powerful in the future that the left circle completely covers the right one? No. According to Wolfram, the essence of linguistic thinking is to seek laws, and a law is a compression of the objective world. Some things do follow laws that let you compress them, but some things are inherently lawless and cannot be compressed.
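The idea of a law as compression can be made concrete with a general-purpose compressor. In the sketch below, zlib stands in for ‘finding a law’; this is only an analogy and says nothing about how GPT works internally. Bytes that follow a simple rule shrink dramatically, while random bytes give the compressor nothing to exploit.

```python
import os
import zlib


def compressed_size(data: bytes) -> int:
    """Size in bytes after zlib compression at the maximum level."""
    return len(zlib.compress(data, 9))


# 10,000 bytes that follow a simple law: "01" repeated.
patterned = b"01" * 5000
# 10,000 random bytes from the operating system: no law for zlib to find.
lawless = os.urandom(10_000)

print(len(patterned), compressed_size(patterned))
print(len(lawless), compressed_size(lawless))
```

The patterned input compresses to a tiny fraction of its size; the random input comes out about as large as it went in, or slightly larger.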

For example, in an earlier column [1] we discussed the simple ‘games’ (cellular automata) Wolfram studied. One of them, Rule 30, exhibits what he calls ‘computational irreducibility’: if you want to know what its future will look like, you can only work it out honestly, step by step; there is no shortcut that lets you generalize.
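Rule 30 is simple enough to write down in a few lines. Each cell’s next value is its left neighbor XOR (itself OR its right neighbor). The sketch below starts from a single black cell and runs the rule; as far as is known, there is no formula that jumps ahead to, say, the center cell of row one million without computing every row before it.

```python
def rule30_step(cells: list[int]) -> list[int]:
    """One Rule 30 update: new cell = left XOR (center OR right).

    The row grows by one cell on each side, because the pattern
    can spread at most one cell per step."""
    padded = [0, 0] + cells + [0, 0]
    return [padded[i - 1] ^ (padded[i] | padded[i + 1]) for i in range(1, len(padded) - 1)]


def center_column(steps: int) -> list[int]:
    """Center cell of each row, starting from a single black cell.

    No shortcut is used: every intermediate row is computed."""
    cells = [1]
    column = []
    for _ in range(steps):
        column.append(cells[len(cells) // 2])
        cells = rule30_step(cells)
    return column


cells = [1]
for _ in range(8):
    print("".join("#" if c else "." for c in cells).center(17))
    cells = rule30_step(cells)
print(center_column(20))
```

The rule itself is trivial; the irregular pattern comes only from running it, which is the point Wolfram makes.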

That’s why GPT does poorly on complicated math: like the human brain, it always looks for patterns and shortcuts, but some math problems leave no option except to grind through the calculation honestly.

Even more fatal, GPT’s neural network is purely ‘feed-forward’ (at least in the computation it does for each new word): information flows in one direction only, with no loops, so it cannot run even ordinary arithmetic algorithms.
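To see why loops matter, here is a sketch of the ordinary schoolbook multiplication algorithm on digit strings. Its number of steps grows with the length of the numbers, and each carry has to be settled before later digits can be finished. A single feed-forward pass, by contrast, does a fixed amount of work no matter how long the numbers are. This illustrates the contrast only; it is not a model of GPT’s internals.

```python
def long_multiply(a: str, b: str) -> tuple[str, int]:
    """Schoolbook multiplication of two decimal digit strings.

    Returns the product and the number of digit-by-digit steps taken.
    The loop runs len(a) * len(b) times, so the work grows with the inputs."""
    result = [0] * (len(a) + len(b))
    steps = 0
    for i, da in enumerate(reversed(a)):
        carry = 0
        for j, db in enumerate(reversed(b)):
            total = result[i + j] + int(da) * int(db) + carry
            result[i + j] = total % 10  # keep one digit here
            carry = total // 10         # pass the rest to the next position
            steps += 1
        result[i + len(b)] += carry
    product = "".join(str(d) for d in reversed(result)).lstrip("0") or "0"
    return product, steps


for x, y in [("25", "48"), ("1231", "434523")]:
    product, steps = long_multiply(x, y)
    print(f"{x} × {y} = {product} ({steps} digit steps)")
```

Multiplying two 2-digit numbers takes 4 steps; a 4-digit by 6-digit product takes 24, and the count keeps growing with longer inputs.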

*This is GPT’s Achilles’ heel: it’s made for thinking, not for cold, hard calculation.*

✵

So although GPT knows more and responds faster than the human brain, as a neural network it is not fundamentally more capable than the brain.

That said, Wolfram has an insight.

What does it say that a neural network built on such simple rules can imitate the human brain so well, or at least the brain’s language system? Most people would say it shows how good GPT is; Wolfram says instead that it shows the brain’s language system is not as deep as we thought.

*GPT proves that language is a simple system!*

The fact that GPT can write essays shows that, computationally, writing an essay is a much shallower problem than we thought. The essence of human language, and of the thinking behind it, has been captured by a neural network.

In Wolfram’s eyes, language is nothing more than a system of rules, both grammatical and semantic. The grammatical rules are simple, while the semantic rules cover everything, including tacit rules such as “objects can move”. Since Aristotle, people have tried to catalog all the logic in language, and no one has ever finished the job; but now GPT has given Wolfram confidence.

Wolfram’s reasoning is: if GPT can do it, so can I. He intends to replace human language with a computational language, the ‘Wolfram Language’. This has two advantages. The first is precision: human language is full of ambiguity and unsuited to exact computation. The second is even more powerful: the Wolfram Language is meant to be the “ultimate compression” of things, capturing the essence of everything in the world.

Having heard of Gödel’s incompleteness theorem, I’m not optimistic about this ambition; but anything I can think of, he must already have thought of, so I won’t raise objections. I’m just passing it along.

✵

All in all, GPT’s bottom line is that, despite its simple structural principles, it already has much of the human brain’s mind. No scientific theory yet fully explains why it can do this, but it does. GPT’s Achilles’ heel also comes from being so much like the human brain: it isn’t very good at mathematical calculation, because it is not a traditional computer.

This also explains why GPT is so good at programming but can’t execute programs on its own: programming is a linguistic act, while executing a program is a cold computational one.

Understanding this gives us some theoretical basis for working out how to get the most out of GPT. But what I want to say now is that GPT’s so-called weaknesses are actually quite easy to remedy.

Can’t we just give it a calculator? Can’t we just have another computer run its programs for it? OpenAI recently began letting users and third-party companies add plug-ins to ChatGPT, which addresses exactly this problem. So GPT is still awesome.
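The division of labor can be sketched with a hypothetical calculator ‘tool’: the model’s job is the linguistic part, writing down the expression, and the tool does the exact arithmetic. The `calculator` function below is purely illustrative and does not reflect OpenAI’s actual plug-in interface. It parses the expression with Python’s `ast` module instead of calling `eval()`, so only integer addition, subtraction, multiplication, and parentheses are executed.

```python
import ast
import operator

# Operations the hypothetical calculator tool supports.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul}


def calculator(expression: str) -> int:
    """Hypothetical calculator tool: exact integer arithmetic with + - × and parentheses."""
    tree = ast.parse(expression.replace("×", "*"), mode="eval")

    def evaluate(node: ast.AST) -> int:
        if isinstance(node, ast.Expression):
            return evaluate(node.body)
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](evaluate(node.left), evaluate(node.right))
        if isinstance(node, ast.Constant) and type(node.value) is int:
            return node.value
        raise ValueError(f"unsupported expression: {ast.dump(node)}")

    return evaluate(tree)


# The model writes the expression (a language task); the tool does the arithmetic.
print(calculator("1231 × 434523 + 323 × 34636"))  # 546085241
```

Anything outside the supported operations, such as exponentiation or function calls, raises `ValueError` rather than being executed.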

But Wolfram has made us see GPT’s fundamental limitation: *neural networks have a limited computational scope.* We now know that even if AGI arrives, neural computation and formal logic alone will not be enough to capture the truths of nature: scientific research ultimately requires direct contact with nature, and calls for external tools and external information.

I hope this talk has demystified GPT a little. It is indeed incredibly strong, but it is far from a panacea.

Notes

[1] Elite Daily Lessons, Season 1: “A Genius’s View of the World”

Key points

- GPT’s bottom line: it’s just a language model, but at the same time, it’s amazing.

- GPT’s Achilles’ heel: it’s made for thinking, not for cold, hard calculation.

- We need to recognize GPT’s fundamental limitation: neural networks have a limited computational scope.