AI Topic 1: Changes not seen since the Enlightenment

ChatGPT has made 2023 the year of AI. Just as the iPhone ushered in the smartphone era in 2007, we have now officially entered the age of artificial intelligence. Elite Day Class is fortunate to be here for this historic moment, so we are running a short series in this column, drawing on four new books, to discuss the latest understanding of this era: how to think about it, where AI is being applied, and how to respond to it.

In this first session, we start with a book published in 2021, The Age of AI: And Our Human Future.

The three authors of this remarkable book are Henry Kissinger, who needs no introduction; Eric Schmidt, the former CEO of Google; and Daniel Huttenlocher, the inaugural dean of the MIT Schwarzman College of Computing.

With such a prestigious lineup, you might expect a book of ceremonial praise that people feel obliged to read. It is not: the book contains real ideas and a genuinely elevated view of our times.

✵

In 2020, MIT researchers announced the discovery of a new antibiotic called Halicin. It is a broad-spectrum antibiotic that kills bacteria that have become resistant to commercially available antibiotics, and bacteria do not readily develop resistance to it.

This fortunate discovery was made with the help of AI. The researchers first assembled a training set of about 2,000 molecules with known properties, each labeled according to whether it inhibits bacterial growth, and used it to train the AI. The AI learned on its own which features characterize these molecules and distilled a set of rules for “what kind of molecule is likely to be antibacterial.”

Once the model was trained, the researchers used it to examine a library of 61,000 molecules made up of FDA-approved drugs and natural products, and asked it to select candidates meeting three criteria: 1) antibacterial activity; 2) a structure unlike any known antibiotic; and 3) no toxicity.

In the end, the AI found just one molecule that met all three requirements: Halicin. The researchers then ran experiments confirming that it works extremely well. It may well reach the clinic before long, for the benefit of humanity.
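The three-way filter described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the MIT team's pipeline: molecules are represented as hypothetical sets of substructure labels, `predicted_activity` and `predicted_toxicity` stand in for a trained model's outputs, “doesn't look like a known antibiotic” is approximated with Tanimoto similarity, and the thresholds and the names `Candidate` and `screen` are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    fingerprint: frozenset       # hypothetical substructure labels
    predicted_activity: float    # stand-in for a model's P(antibacterial)
    predicted_toxicity: float    # stand-in for a model's P(toxic)


def tanimoto(a, b):
    """Tanimoto (Jaccard) similarity between two binary fingerprints."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def screen(candidates, known_antibiotics, min_activity=0.5,
           max_similarity=0.4, max_toxicity=0.2):
    """Keep candidates that are active, structurally novel, and non-toxic."""
    hits = []
    for c in candidates:
        active = c.predicted_activity >= min_activity               # criterion 1
        novel = all(tanimoto(c.fingerprint, k) < max_similarity     # criterion 2
                    for k in known_antibiotics)
        safe = c.predicted_toxicity <= max_toxicity                 # criterion 3
        if active and novel and safe:
            hits.append(c.name)
    return hits


# Hypothetical data: one known antibiotic and four screened molecules.
known = [frozenset({"f1", "f2", "f3"})]
library = [
    Candidate("A", frozenset({"f1", "f2", "f3"}), 0.9, 0.1),   # active but not novel
    Candidate("B", frozenset({"f4", "f5"}), 0.8, 0.05),        # passes all three
    Candidate("C", frozenset({"f4", "f6"}), 0.3, 0.05),        # predicted inactive
    Candidate("D", frozenset({"f5", "f6"}), 0.7, 0.6),         # predicted toxic
]
hits = screen(library, known)
print(hits)  # ['B']
```

Only candidate B survives: A is structurally identical to the known antibiotic, C is predicted inactive, and D is predicted toxic. The real difficulty lies in producing trustworthy predicted scores, which is the part the trained model supplies.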

This could never have been done with traditional research methods: testing 61,000 molecules experimentally would be prohibitively expensive. And this is just one of many applications of contemporary AI; it was a lucky find, but not an exceptional one.

We start with this example because it delivers a clear cognitive shock: the AI identified Halicin as an antibiotic on the basis of chemical features that human scientists do not understand.

Scientists already had some ideas about what kinds of molecules could be antibiotics, for instance that their atomic weights and chemical bonds should have certain characteristics. But the AI's discovery used none of those characteristics. While training on those 2,000 molecules, the AI found features unknown to scientists and then used them to discover the new antibiotic.

What are those features? Nobody knows. The entire trained model is just a large collection of parameters, perhaps tens to hundreds of thousands of them, and humans cannot read a theory out of those numbers.

AlphaZero learned chess and Go purely by playing against itself, without studying any human games, and then easily surpassed human players. Its moves are often ones human players would never consider. In chess, for example, it will sometimes seemingly throw away an important piece such as the queen. Sometimes you can work out afterward why it did so, and sometimes you cannot.

The key point is that the AI's way of thinking differs from human rational reasoning.

In other words, the most powerful thing about contemporary AI is not that it automates things, still less that it is human-like, but that it is not human-like: *it can find solutions that lie outside the scope of human understanding.*

✵

This is not like the automobile replacing the horse, nor is it merely a step forward in technology. It is a philosophical leap.

Humans have pursued ‘rationality’ since the ancient Greeks and Romans. During the Enlightenment, people imagined that the world could be described by clear rules like Newton's laws, and after Kant, some even hoped to reduce morality to rules. We imagined that the laws of the world could be written down line by line, like the articles of a legal code. Scientists have long sorted everything into disciplines, each summarizing its own laws, with the ideal of eventually compiling all knowledge into an encyclopedia.

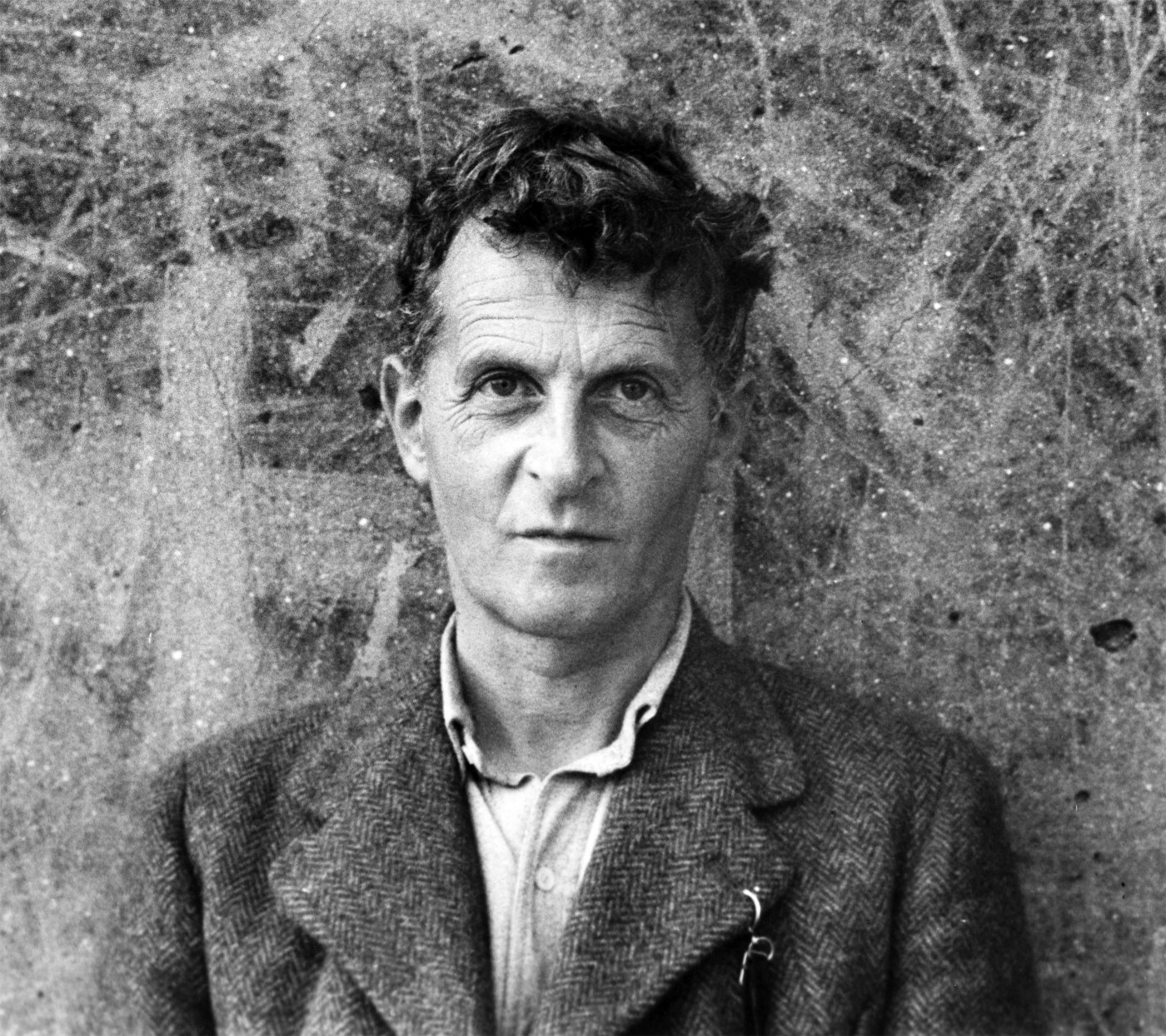

In the 20th century, however, the philosopher Ludwig Wittgenstein made a new argument: writing up knowledge discipline by discipline can never exhaust everything there is to know. There are always resemblances between things (what he called “family resemblances”) that are vague, hard to pin down, and difficult to put into words, and not all of them can be made explicit through reason.

What AI is finding now is precisely this kind of intelligence, the kind that is hard to understand and cannot be captured by clear rules. It is a defeat for Platonic rationality and a triumph for Wittgenstein.

You can see this even without AI. What is a “cat,” for example? It is hard to define exactly, yet when you see one, you know it is a cat. This kind of cognition differs from the rule-based rationality people have talked about since the Enlightenment; you could call it a kind of ‘feeling’.

It is a feeling that is hard to articulate and impossible to convey to someone else. Our recognition of cats is largely intuitive rather than reasoned.

And now AI has this kind of feeling too. People have always had it, and that in itself would be nothing new; Kant acknowledged that sensory perception is indispensable. The problem is that the AI, through this kind of feeling, has picked up laws that humans cannot comprehend. Kant believed that only rational cognition could grasp the universal laws of the world.

The AI senses laws that humans can neither grasp through reason nor perceive through feeling, and it can put those laws to work.

*Humans are no longer the only discoverers and perceivers of the world's laws.*

Wouldn't you call this a change not seen since the Enlightenment?

✵

Let's briefly introduce how contemporary AI works. Some people talk about AI as if it were a “superintelligence,” a kind of god that can do things to human beings. That kind of discussion is not very useful: if a god had already come down to earth, what would be left to discuss? Let's not mythologize it.

Today's AI is not artificial general intelligence (AGI) but a very specific kind of intelligence: a neural network trained through machine learning.

Before the 1980s, scientists were still following the Enlightenment mindset of rationality: they tried to hand computers explicit problem-solving rules to execute. That route turned out not to work, because there were too many rules to write down, and this is what led to neural networks. Now we do not have to give the AI any rules at all. We delegate the process of learning about the world to the machine and let it learn whatever rules it finds.

The idea is inspired by the neural networks of the human brain, but it is not the same thing. Our column has previously covered the basic concept of an AI neural network [1]: it consists of an input layer, a number of intermediate (hidden) layers, and an output layer, and a typical deep learning network might have around 10 layers.
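To make “input layer, intermediate layers, output layer” concrete, here is a minimal forward pass in NumPy. The layer sizes are hypothetical and tiny, and the weights are random rather than trained; the point is that a network's “parameters” are simply the entries of its weight matrices and bias vectors.

```python
import numpy as np

# Hypothetical sizes: input layer, three hidden layers, output layer.
layer_sizes = [8, 16, 16, 16, 2]

rng = np.random.default_rng(0)
# Between each pair of consecutive layers: a weight matrix and a bias vector.
weights = [rng.normal(0.0, 0.5, size=(m, n))
           for m, n in zip(layer_sizes[:-1], layer_sizes[1:])]
biases = [np.zeros(n) for n in layer_sizes[1:]]


def forward(x):
    """Pass an input vector through every layer in turn."""
    h = x
    for i, (W, b) in enumerate(zip(weights, biases)):
        h = h @ W + b
        if i < len(weights) - 1:       # ReLU nonlinearity on hidden layers only
            h = np.maximum(h, 0.0)
    return h


n_params = sum(W.size + b.size for W, b in zip(weights, biases))
print(n_params)                        # 722
print(forward(np.ones(8)).shape)       # (2,)
```

Here the count is (8×16 + 16) + 2×(16×16 + 16) + (16×2 + 2) = 722. Applying the same counting to far wider and deeper layers is how real models reach billions of parameters.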

Using an AI neural network involves two phases: “training” and “inference.” An untrained network is useless; it has only a well-designed structure and tens or even hundreds of billions of parameters. You have to feed it a large amount of material for training: each time a piece of material passes through the network, the weight of every parameter is adjusted once. This process is what we call machine learning. When training is essentially complete, you freeze all the parameters, and the model is finished. You can then use it to make inferences about all sorts of new situations and produce outputs.
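The split between training and inference can be shown with the smallest possible “network”: a single neuron with two parameters, trained by per-example gradient descent, so that every parameter is adjusted once per training example as described above. The synthetic data, the learning rate, and the names `FROZEN` and `infer` are assumptions for illustration; real networks use vastly more parameters, mini-batches, and more elaborate optimizers.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical training material: y = 3x + 1 plus a little noise.
x_train = rng.uniform(-1, 1, size=200)
y_train = 3 * x_train + 1 + rng.normal(0, 0.05, size=200)

# The "network" is one neuron with two parameters.
w, b = 0.0, 0.0
learning_rate = 0.1

# Training: each example that passes through adjusts every parameter once.
for _ in range(20):                      # 20 passes over the data
    for x, y in zip(x_train, y_train):
        error = (w * x + b) - y
        w -= learning_rate * error * x   # gradient of 0.5 * error**2 w.r.t. w
        b -= learning_rate * error       # gradient of 0.5 * error**2 w.r.t. b

# Training is done: freeze the parameters.
FROZEN = (w, b)


def infer(x_new):
    """Inference: read the frozen parameters; nothing is updated."""
    fw, fb = FROZEN
    return fw * x_new + fb


print(FROZEN)
print(infer(0.5))
```

After training, `FROZEN` holds values close to 3 and 1, and calling `infer` any number of times leaves them untouched, much as using ChatGPT does not change its underlying model.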

At the time of writing, ChatGPT uses a language model from the GPT-3.5 series, which was probably trained between 2021 and 2022. Each time we use ChatGPT, we are only running inference with that model; we are not changing it.

GPT-3.5 has over a hundred billion parameters, and future models will have more. The parameter counts of AI models are growing faster than Moore's Law, and working with neural networks is extremely computationally intensive.

✵

*The three most popular ways to train neural networks today are supervised learning, unsupervised learning, and reinforcement learning.*

The AI that discovered the new antibiotic is a classic example of ‘supervised learning’. For the training dataset of 2,000 molecules, you have to label in advance which molecules have an antibacterial effect and which do not, so that the neural network has a target to learn toward during training. Image recognition is also supervised learning: a great deal of human labor goes into labeling what is in each training image before the images are fed to the AI.
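Supervised learning in miniature: a one-neuron classifier (logistic regression) trained on labeled examples. The two-number “molecule” features, the labels, and the learning rate are invented for this sketch; the Halicin work used a much larger neural network. What makes it supervised is that the loss compares every prediction against a human-provided label.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical labeled training set: each "molecule" is a 2-number feature vector,
# labeled 1 (antibacterial) or 0 (not) in advance.
n = 100
X_pos = rng.normal([2.0, 2.0], 0.5, size=(n, 2))
X_neg = rng.normal([-2.0, -2.0], 0.5, size=(n, 2))
X = np.vstack([X_pos, X_neg])
y = np.concatenate([np.ones(n), np.zeros(n)])

w = np.zeros(2)
b = 0.0


def predict_proba(features):
    """Probability that each row is antibacterial, under the current w and b."""
    return 1.0 / (1.0 + np.exp(-(features @ w + b)))


# The labels y are what make this supervised: the gradient compares predictions to them.
for _ in range(500):
    p = predict_proba(X)
    grad_w = X.T @ (p - y) / len(y)    # gradient of mean cross-entropy loss
    grad_b = np.mean(p - y)
    w -= 0.5 * grad_w
    b -= 0.5 * grad_b

accuracy = np.mean((predict_proba(X) >= 0.5) == y)
print(accuracy)
```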

If the amount of data you want to learn from is so large that you cannot label it, you need “unsupervised learning.” You do not have to say what each piece of data is; the AI discovers patterns and connections on its own as it goes through the data.
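Unsupervised learning can be illustrated with k-means clustering, a classic algorithm chosen here only for brevity (it is not a neural network). It receives points with no labels at all and discovers the groups by itself. The two synthetic blobs below are invented for this sketch.

```python
import numpy as np


def kmeans(X, k, n_iter=50, seed=0):
    """Plain k-means: group unlabeled points into k clusters."""
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        # Assign each point to its nearest center.
        dists = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Move each center to the mean of its points (keep it if the cluster is empty).
        new_centers = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers


# Hypothetical data: two groups of points, but no labels are supplied.
rng = np.random.default_rng(3)
X = np.vstack([rng.normal([0.0, 0.0], 0.5, size=(50, 2)),
               rng.normal([5.0, 5.0], 0.5, size=(50, 2))])
labels, centers = kmeans(X, k=2)
print(centers)
```

Nobody told the algorithm that there were two groups around (0, 0) and (5, 5); it recovers them from the data alone, apart from being told how many clusters to look for.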

For example, the algorithm Taobao uses to recommend products is unsupervised learning: the AI does not care what kind of products you buy; it simply finds out which other products were also bought by customers who bought the same products as you.
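The “customers who bought this also bought” idea can be sketched as simple co-occurrence counting. This is an assumption-laden toy: Taobao's actual system is not described in the source, the baskets are invented, and this counter is not a neural network. It does show the key property, though: no item carries a label, and the associations come purely from the data.

```python
from collections import Counter
from itertools import combinations

# Hypothetical purchase histories: one set of items per customer.
baskets = [
    {"tent", "sleeping_bag", "flashlight"},
    {"tent", "sleeping_bag"},
    {"tent", "sleeping_bag", "stove"},
    {"laptop", "mouse"},
    {"laptop", "mouse", "keyboard"},
]

# Count how often each pair of items appears in the same customer's history.
pair_counts = Counter()
for basket in baskets:
    for a, b in combinations(sorted(basket), 2):
        pair_counts[(a, b)] += 1


def also_bought(item, top_n=2):
    """Items most often bought by customers who also bought `item`."""
    scores = Counter()
    for (a, b), count in pair_counts.items():
        if a == item:
            scores[b] += count
        elif b == item:
            scores[a] += count
    return [other for other, _ in scores.most_common(top_n)]


print(also_bought("tent", top_n=1))    # ['sleeping_bag']
print(also_bought("laptop", top_n=1))  # ['mouse']
```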

“Reinforcement learning” is learning in a dynamic environment where the AI gets feedback on every move it makes. When AlphaZero plays chess, for example, it evaluates whether each move raises or lowers its chances of winning, receives an immediate reward or penalty, and continually adjusts itself.

Autonomous driving also uses reinforcement learning. The AI does not passively watch large numbers of driving videos; it acts directly in a real-time environment, observes the result of each of its own actions, and gets timely feedback.
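The reward-and-adjust loop can be sketched with tabular Q-learning, a textbook reinforcement learning algorithm. The corridor environment, the rewards, and the hyperparameters are hypothetical, and AlphaZero and real driving systems use far more sophisticated methods. The agent is never told the right move; it only receives a reward after each action and updates its estimate of that action's value.

```python
import random

# Hypothetical environment: a corridor of 6 cells. The agent starts at cell 0;
# reaching cell 5 ends the episode with reward +1. Every other move costs -0.01.
N_STATES = 6
ACTIONS = (-1, +1)  # step left or step right


def step(state, action):
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True
    return next_state, -0.01, False


q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
alpha, gamma, epsilon = 0.5, 0.9, 0.1
rng = random.Random(0)


def greedy(state):
    """Best-known action in this state (ties go to the first action)."""
    return max(ACTIONS, key=lambda a: q[(state, a)])


for episode in range(200):
    state, done = 0, False
    while not done:
        # Mostly exploit what has been learned; occasionally explore at random.
        action = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(state)
        next_state, reward, done = step(state, action)
        # Immediate feedback: nudge this move's value toward reward + future value.
        future = 0.0 if done else gamma * max(q[(next_state, a)] for a in ACTIONS)
        q[(state, action)] += alpha * (reward + future - q[(state, action)])
        state = next_state

policy = [greedy(s) for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1, 1]
```

After training, the learned policy steps right in every cell, toward the goal, even though no one ever told the agent which direction was correct.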

Here is a simple analogy:

Supervised learning is like a teacher in school: every question has a standard answer, but the teacher does not explain the principle behind it.

Unsupervised learning is like a scholar who studies a large body of material on their own and comes to understand it through extensive reading.

Reinforcement learning is like training an athlete: whenever a movement is wrong, it is corrected immediately.

✵

Machine translation started out as typical supervised learning. To build English-to-Chinese translation, for example, you feed original English texts together with their Chinese translations into a neural network and let it learn the correspondence. But learning this way is slow going, because many English works have no translated version at all. Later, a particularly clever approach was developed using what are called ‘parallel corpora’.

First, you run a period of supervised learning on translated texts as “pre-training.” Once the model has more or less got the hang of it, you give it a large pile of English and Chinese material on the same topics, articles and books alike, without requiring that any of it be a translation of anything else, and let it learn on its own. This step is unsupervised learning: after a period of immersive learning, the AI can work out which passage of English corresponds to which passage of Chinese. This kind of training is less precise, but because far more data is available, the result is much better.

AI systems that handle natural language now use a newer technique called the ‘transformer’. It is better at discovering the relationships between words and does not require words to be processed strictly one after another. In “the cat likes the toy,” for example, “cat” and “likes” stand in a subject-predicate relationship, while “cat” and “toy” stand in a “uses” relationship between two nouns, and the transformer can discover such relationships on its own.
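The core operation a transformer uses to relate words to one another is scaled dot-product self-attention. Below is a minimal NumPy sketch; the three-word sentence, the random (untrained) embeddings, and the projection matrices `W_q`, `W_k`, `W_v` are assumptions, so the resulting weights carry no linguistic meaning. In a trained model those matrices are learned, and the rows of `weights` come to reflect relationships between words.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(X, W_q, W_k, W_v):
    """Scaled dot-product self-attention over a sequence of word vectors X."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # scores[i, j]: how much word i attends to word j
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights


rng = np.random.default_rng(0)
words = ["cat", "likes", "toy"]
d_model, d_k = 4, 4
X = rng.normal(size=(len(words), d_model))  # hypothetical, untrained word embeddings
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

output, weights = self_attention(X, W_q, W_k, W_v)
print(np.round(weights, 2))
```

Every word is compared with every other word in one matrix product, rather than one word at a time. Attention by itself also ignores word order: shuffling the input rows simply shuffles the output rows, which is why real transformers add positional information to each word vector.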

Another popular technique is the “generative neural network,” which can generate something from your input, such as a painting, an article, or a poem. One well-known way to train such networks, the generative adversarial network (GAN), pits two networks with complementary learning goals against each other: a generator, which produces content, and a discriminator, which judges its quality. Through training, each pushes the other to improve.

GPT stands for “Generative Pre-trained Transformer”: a generative, pre-trained model based on the transformer architecture.

✵

All current AI systems are products of big-data training, and in principle their knowledge depends on the quality and quantity of their training material. But thanks to the many advanced algorithms now available, AI has become so capable that it can do more than predict how often words occur: it can grasp the relationships between words and exercise quite good judgment.
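For contrast, the “predict how often a word occurs” starting point can be sketched as a bigram model that only counts which word follows which. The corpus and the function names `train_bigram` and `predict_next` are hypothetical; the sketch illustrates the frequency-counting baseline, not how GPT works.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it and how often."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = "the cat likes the toy . the cat sleeps . the dog likes the cat ."
model = train_bigram(corpus)
print(predict_next(model, "the"))    # cat
print(predict_next(model, "likes"))  # the
print(predict_next(model, "zebra"))  # None
```

A model like this knows only which word tends to come next. It has no notion that “cat” is the subject of “likes,” which is exactly the kind of relationship the more advanced algorithms described here can capture.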

But AI's most remarkable advantage is that it can discover patterns that human reason cannot understand and make judgments based on them.

AI is essentially a black box: it swallows a mass of material and then suddenly announces, “I can do it.” You test it and find that it really can, but you do not know how it does it.

Because a neural network is essentially just a large set of parameters, incomprehensibility is arguably an inherent characteristic of AI. In fact, even OpenAI's researchers cannot fully explain why ChatGPT works so well.

Seen this way, we may be witnessing the awakening of a new form of intelligence.

At the end of this session, I asked ChatGPT to write five questions about five AI application scenarios, to test whether you remember which learning method each one uses.

➡️ Click here to see how many points you can get.

Annotation

[1] Elite Day Class Season 3, Learn a “Deep Learning” Algorithm

Key points

- AI perceives laws that humans can neither grasp through reason nor sense through feeling, and it can use those laws to do things. Humans are no longer the only discoverers and perceivers of the world's laws.

- The three most popular ways to train neural networks today are supervised learning, unsupervised learning, and reinforcement learning.